Laplace's Demon

“I used to say of him that his presence on the field made the difference of forty thousand men.”

~Arthur Wellesley, 1st Duke of Wellington, speaking of Napoleon Bonaparte

War is hell

What is the best way to wage war? What do all great generals have in common that bad generals lack? What strategy will ensure that your forces win and the other side loses? In How Great Generals Win, military historian and veteran of the Korean War Bevin Alexander (2002) makes the case that what truly sets apart great generals is their disposition to only attack the flanks (sides) and rear of the opponent and the poise to not engage in a full frontal attack. Full frontal attacks, such as the kind that were repeatedly attempted in WWI, rarely lead to a decisive victory and instead simply increase the casualties on both sides. Alexander acknowledges that there are other requirements for being successful in war, such as having up-to-date armaments, getting to the theater of battle early and with sufficient forces, having reliable intelligence on the operations of the enemy, and mystifying and confusing your opponent as much as possible. But all things being equal, Alexander argues that attacking the flanks and rear is what most successful generals have done in most of the battles that they've won. One example that Alexander gives of a general who employed this strategy is Napoleon Bonaparte (1769-1821).

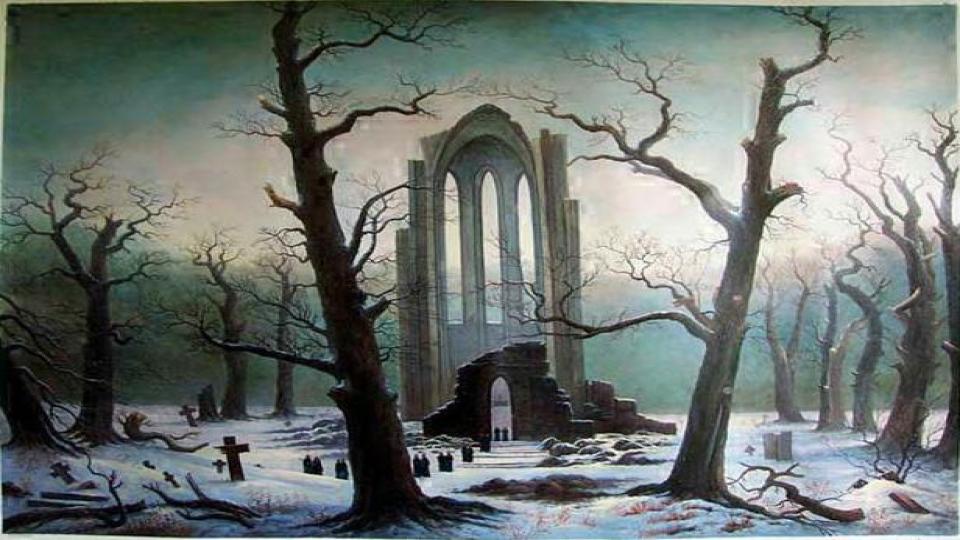

Let's begin with some context. As you recall, the Thirty Years' War (1618-1648) desolated large portions of Western Europe and caused the deaths of about 8 million people, including about one-fifth of the German population. Thousands of towns and villages were burned and abandoned. At the conclusion of the conflict, the map of Europe had been altered, and absolute kings gained control of the devastated territories. The kings that ruled these territories were set upon stopping the depredation, and they established professional standing armies that were kept separate from the general population. These professional armies had a lamentable combination of traits: they were both extremely expensive and extremely untrustworthy. They were expensive because they had to be kept on war footing at all times. There was essentially never any demobilization; they were always ready for war. The soldiers, however, were recruited from the dregs of society. They were violent and prone to desertion. The generals kept a constant watch on them, apparently not even letting them bathe without supervision. Because of their expense and their unreliable nature, these armies were kept small and military tactics were formed around these constraints.

But things were about to change. The writings of thinkers like Jean-Jacques Rousseau (1712-1778) challenged the aristocracy and argued instead for democracy and freedom. Indeed, in one nation in particular these ideas led to the overthrow of the monarchy and the institution of a republic. This is, of course, France. Although the French Revolution deserves a whole course unto itself, here's what we can say about how it affected war. Since the soldiers were no longer merely subjects but citizens, there was an increased loyalty to the state. Since they were, in a sense, the state, a type of worship of the state began. Nationalism was born. Now the soldiers could be trusted to not desert, to be committed to the cause of the nation-state. Armies could grow in size and were more maneuverable. Into these circumstances entered a military genius who would transform warfare.

A Gribeauval cannon from the Napoleonic era.

Per Alexander (2002, chapter 3), Napoleon's favorite tactic was the strategic battle. Napoleon would first attack frontally with enough determination to fool his enemy into thinking that he intended to break through. Napoleon would then send a second, large force to attack the flank of the enemy. This would cause the enemy to divert forces from their center to the flank being attacked. Since the enemy had to send reinforcements to the flank hastily, these were usually taken from the part of the center closest to the flank being attacked. This created a weak part in the center (again, the part closest to the flank being attacked). And so, the third part of this strategy was to unleash an artillery attack that had been hidden opposite the part of the center that was now weak. Napoleon knew this portion would be weak, since he had planned on attacking the flank near it and hence knew the enemy would pull men from this region. After the artillery attack, Napoleon would concentrate forces and push through the weakened part of the center. Speaking very loosely, Napoleon faked a frontal attack, then faked a lateral attack, and then pushed through the weakened part between the faked frontal and the faked lateral attack.1

This was, of course, not the only strategy that made Napoleon militarily successful. Moreover, the strategic battle was described ideally above, but as Prussian military commander Helmuth von Moltke said, "No plan survives first contact with the enemy." This appears to be true in all types of combat. Mike Tyson is quoted as saying, "Everybody has a plan until they get punched in the mouth." And so, Napoleon's strategic battle was hardly ever executed in pristine form, but this is not the place to give those details. Lastly, it seems Napoleon did not always follow his own strategic recommendations. After he had become emperor, Alexander claims that Napoleon no longer attempted to win battles through guile, speed, and deception; instead, he purchased victories at the cost of human lives.

Nonetheless, Napoleon is remembered for his genius in battle, even if it was inconstant throughout his life. It should come as no surprise that he was taught by some of the best. During his time in the École Militaire, he came into contact with one of the greatest mathematicians of the era: Pierre-Simon Laplace (1749-1827). Laplace was the first unambiguous proponent of a view called determinism. We'll cover this view later in this lesson.

But before leaving Napoleon, let's consider for a moment what we can learn about Napoleon's military skill when we use quantitative methods. In other words, let's look at what mathematical techniques can teach us about Napoleon. Apparently, he was really a force to be reckoned with.

“Using the methods developed by military historians… it is possible to do a statistical analysis of the battle outcomes. Taking into account various factors, such as the numbers of men on each side, the armaments, position, and tactical surprise (if any), the analysis shows that Napoleon as commander acted as a multiplier, estimated as 1.3. In other words, the presence of Napoleon was equivalent to the French having an extra 30 percent of troops... Most likely, all of these factors were operating together, and we cannot distinguish between them with data. We do know, however, that the presence of Napoleon had a measurable effect on the outcome” (Turchin 2007: 314-15).
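To make the multiplier concrete, here is a minimal Python sketch of the arithmetic in Turchin's claim. The army size of 70,000 and the function name are hypothetical, chosen only for illustration. The only figure taken from the quote is the multiplier of 1.3.

```python
def equivalent_extra_troops(troops, commander_multiplier):
    """Extra troops a commander's presence is 'worth' under a simple multiplier model."""
    return round(troops * (commander_multiplier - 1))


NAPOLEON_MULTIPLIER = 1.3  # Turchin's estimate, as quoted above
french_troops = 70_000     # hypothetical army size, for illustration only

extra = equivalent_extra_troops(french_troops, NAPOLEON_MULTIPLIER)
print(extra)  # 21000
```

Under these assumptions, a French army of 70,000 led by Napoleon would fight as if it had 91,000 men. This is only a restatement of the quoted estimate, not a model of any actual battle.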

The use of statistical methods was, in fact, popularized by Napoleon's teacher, Laplace. Laplace did this by writing non-technical, popular essays on mathematical ideas. As Laplace's methods spread, scientists were fascinated by how all human actions become more predictable when you use statistical methods; everything seemed to fall into accordance with a (statistical) natural law. To these scientists, as well as to many non-scientists, this pointed towards a concerning idea: that maybe humans don't have free will. Indeed, soon after Laplace, the field of social physics was proposed, a field that understood the regularities in things like suicide and crime rates as the products of social conditions. In this way of viewing things, criminals aren’t doing things of their own free will; their actions are determined instead by their social conditions. Human actions, in other words, are just the product of natural law, not of human thoughts and desires. Stay tuned.

Carrying on with the Cartesian project

“...Work on gravitation [by Newton, 1643-1727] presented mankind with a new world order, a universe controlled throughout by a few universal mathematical laws which in turn were derived from a common set of mathematically expressible physical principles. Here was a majestic scheme which embraced the fall of a stone, the tides of the oceans, the moon, the planets, the comets which seemed to sweep defiantly through the orderly system of planets, and the most distant stars. This view of the universe came to a world seeking to secure a new approach to truth and a body of sound truths which were to replace the already discredited doctrines of medieval culture. Thus it was bound to give rise to revolutionary systems of thought in almost all intellectual spheres. And it did...” (Kline 1985: 359; interpolation is mine).

When we last left off, we were exploring the ideas of Blaise Pascal, who died in 1662, and now we find ourselves suddenly catapulted to the early 19th century. Why? Well, the main reason is that we are attempting to salvage the Cartesian project. If we can somehow establish God's existence, as Descartes attempted, then perhaps we can defend foundationalism over Locke's indirect realism and Bacon-inspired positivism. However, the most pressing threat to belief in the existence of God comes from the problem of evil argument. And so, before advancing, we must solve the problem of evil. One frequently suggested solution to the problem of evil is the free will solution, the view that it is human free will that causes unnecessary suffering in the world. Although this solution doesn't really account for suffering caused by, for example, natural disasters—since it is nowadays unreasonable to assume that human actions cause, say, volcanic eruptions—there is a lot of suffering that is definitely caused by human actions. So the free will solution should be explored, and this is why we have jumped forward to the 19th century: a very credible threat to the free will solution manifests itself in 1814.

Before discussing the threat to free will, however, allow me to give you one bit of context. In the 18th century, we find ourselves in a period called the Enlightenment. Just when this era began is disputed. Some argue that it began with Descartes' writings, since Descartes was the first to unambiguously formulate the principle that is now known as Newton's first law of motion. Many (if not most) instead claim that the Enlightenment began when Isaac Newton published his Principia Mathematica in 1687. Regardless of when it started, this intellectual movement further weakened an already reeling Catholic Church, it undermined the authority of the monarchy and the notion of the divine right of kings, and it paved the way for the political revolutions in the late 1700s, including the American and French revolutions. Right in the middle of this intellectual upheaval came a man named Laplace.

Pierre-Simon Laplace (1749-1827) was a French polymath who made sizable contributions to mathematical physics, probability theory, and other subfields. He even suggested that there could be massive stars whose gravity is so great that not even light could escape from their surface. This is, of course, a black hole. In any event, we come to his work for one very important reason. In 1814, in a work titled A Philosophical Essay on Probabilities, Laplace wrote the following:

"We may regard the present state of the universe as the effect of its past and the cause of its future. An intellect which at a certain moment would know all forces that set nature in motion, and all positions of all items of which nature is composed, if this intellect were also vast enough to submit these data to analysis, it would embrace in a single formula the movements of the greatest bodies of the universe and those of the tiniest atom; for such an intellect nothing would be uncertain and the future just like the past would be present before its eyes" (p. 4).

Pierre-Simon Laplace (1749-1827).

Two important ideas are found in this passage. The first is the unambiguous articulation of determinism, the view that all events are caused by prior events in conjunction with the laws of nature; i.e., the view that all events are forced upon us by past events plus the laws of physics. The second is the introduction of the idea that some sufficiently powerful intellect could very well predict and retrodict everything that will happen and everything that has happened. This intellect would, in fact, know every single action you'll ever take. This is Laplace's demon.
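A toy illustration may help make "predict and retrodict" concrete. The Python sketch below is an assumption-laden miniature, not Laplace's own formulation: it treats the "universe" as a single object moving under constant acceleration, with made-up starting values. Given the state at one moment, the same law yields the state at any later moment (prediction) and at any earlier moment (retrodiction). Exact fractions are used so that the round trip is not blurred by floating-point rounding.

```python
from fractions import Fraction

# Constant acceleration in m/s^2 (roughly Earth's gravity); an illustrative assumption.
G = Fraction(-98, 10)


def evolve(position, velocity, dt):
    """Return (position, velocity) after dt seconds; a negative dt runs time backward."""
    new_position = position + velocity * dt + G * dt * dt / 2
    new_velocity = velocity + G * dt
    return new_position, new_velocity


# Hypothetical present state: at height 0 m, moving upward at 20 m/s.
present = (Fraction(0), Fraction(20))

future = evolve(*present, Fraction(3))         # predict the state 3 seconds ahead
recovered = evolve(*future, Fraction(-3))      # retrodict from that future state

print(future)                # (Fraction(159, 10), Fraction(-47, 5))
print(recovered == present)  # True
```

The point of the sketch is only that, in a deterministic law, the present state fixes both the future and the past. Laplace's demon is imagined to do the same for every particle in the universe at once.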

Just as God's omniscience seemed to threaten free will, it seems like the very nature of causation (the relationship between cause and effect) leaves no room for human free will. It's even more complicated, though. Whereas not everyone believes in God, it does appear that all rational persons must believe that there is a natural order to the universe; in other words, there is a "way the universe works". If this "way the universe works" is in fact captured by the notion of determinism, then there appears to be no way to defend the view that humans have free will. This is because if every event that ever happened had to happen, then this includes your choices. This means that your choices didn't really come from you; they are just the result of the laws of nature working on the initial state of the universe. Free will is an illusion.2

However, it certainly seems like free will exists. It certainly seems like when I go to the ice cream shop, the act of choosing mint chocolate chip comes from me—not from the Big Bang and the laws of nature.

Moreover, at least at first glance, it really does seem like human free will and determinism are incompatible. In other words, it does appear to be the case that if determinism is true, then human free will is an illusion. Alternatively, it seems that if humans do have free will, then determinism must be false (because at least some events, i.e., human choices, are not determined by the laws of nature and the initial state of the universe).

Our intuitions about the nature of the universe and our capacity to make free choices appear to be at odds with each other. This is, in a nutshell, the next problem we must tackle. This, then, is dilemma #4: Do we have free will?

Important Concepts

Decoding Laplace's Demon

Question:

Is determinism true?

“The first flowering of modern physical science reached its culmination in 1687 with the publication of Isaac Newton’s Principia, thereafter mechanics was established as a mature discipline capable of describing the motions of particles in ways that were clear and deterministic. So complete did this new science seem to be that by the end of the 18th century the greatest of Newton’s successors, Pierre Simon Laplace, could make his celebrated assertion that a being equipped with unlimited calculating powers and given complete knowledge of the dispositions of all particles at some instant of time could use Newton’s equations to predict the future and to retrodict, with equal certainty, the past of the whole universe. In fact, this rather chilling mechanistic claim always had a strong suspicion of hubris about it” (Polkinghorne 2002: 1-2).

Non-Euclidean geometry

Although determinism was not the first threat to free will (recall the problem of divine foreknowledge), it did carry a lot of weight in the scientific community. As such, it was a threat that had to be dealt with. Much ink has been spilled on this topic. As it happens, confidence in the truth of determinism began to wane in two distinct time periods.

Projecting a sphere to a plane.

First, in the same decade in which Napoleon was being dethroned (for the second time), two mathematicians (Carl Friedrich Gauss and Ferdinand Karl Schweikart) were independently working on something that would eventually be called non-Euclidean geometry. Neither published their results, but they continued to work on this new type of geometry. Several other famous mathematicians enter the picture and by the 1850s there are several kinds of non-Euclidean geometries, including Bolyai-Lobachevskian geometry and Riemannian geometry. In all honesty, it is hard to convey the shockwave that non-Euclidean geometry sent through scientific circles (although this video might help). Instead, I'll just say that the discovery of non-Euclidean geometries forced many to rethink their understanding of nature. Here's how Morris Kline summarizes the event:

“In view of the role which mathematics plays in science and the implications of scientific knowledge for all of our beliefs, revolutionary changes in man’s understanding of the nature of mathematics could not but mean revolutionary changes in his understanding of science, doctrines of philosophy, religious and ethical beliefs, and, in fact, all intellectual disciplines... The creation of non-Euclidean geometry affected scientific thought in two ways. First of all, the major facts of mathematics, i.e., the axioms and theorems about triangles, squares, circles, and other common figures, are used repeatedly in scientific work and had been for centuries accepted as truths—indeed, as the most accessible truths. Since these facts could no longer be regarded as truths, all conclusions of science which depended upon strictly mathematical theorems also ceased to be truths... Secondly, the debacle in mathematics led scientists to question whether man could ever hope to find a true scientific theory. The Greek and Newtonian views put man in the role of one who merely uncovers the design already incorporated in nature. However, scientists have been obliged to recast their goals. They now believe that the mathematical laws they seek are merely approximate descriptions and, however accurate, no more than man’s way of understanding and viewing nature... The most majestic development of the 17th and 18th centuries, Newtonian mechanics, fostered and supported the view that the world is designed and determined in accordance with mathematical laws... But once non-Euclidean geometry destroyed the belief in mathematical truth and revealed that science offered merely theories about how nature might behave, the strongest reason for belief in determinism was shattered” (Kline 1967: 474-475).

Here's the way I like to summarize things. Mathematics had seemed to intellectuals like a gateway to the realm of truth, like an undeniable fact of reason, like something that could be known with certainty. But further developments in mathematics made the whole enterprise seem more like a set of systems that we invent, and we invent them in ways that are useful to us. So, some began to believe that it's not that mathematics is a gateway to undeniable truth; it's that we invent mathematics in ways that are useful and this makes it seem like a gateway to undeniable truth. For this reason, determinism, the idea that all physical events are governed by natural laws that could be expressed mathematically, just didn't seem like objective fact anymore. Physicists had just made theories and mathematical formulations that made it seem that way.

Quantum mechanics

The second event that undermined determinism is the advent of quantum mechanics (QM). QM is even more complicated than non-Euclidean geometry, but DeWitt (2018, chapter 26) helpfully distinguishes three separate topics of discussion relating to QM:

- Quantum facts, i.e., experimental results involving quantum entities, such as photons, electrons, etc.

- Quantum theory, i.e., the mathematical theory that explains quantum facts.

- Interpretations of quantum theory, i.e., philosophical theories about what sort of reality quantum theory suggests.

I cannot possibly explain the details of QM here. What is most relevant is the following. First, there's this quantum fact: electrons and photons behave like waves, unless they are observed, in which case they behave like particles. This is called the observer effect. Also of relevance is the type of mathematics involved in QM. The mathematics used in predicting the movement of a cannonball is the mathematics of discrete particles, or particle mathematics; this is the kind of mathematics that Galileo and Descartes would've been familiar with. The mathematics used for quantum theory is wave mathematics. If this is all too much, don't worry. This is a puzzle that is still unresolved today. To understand why, please watch this cheesy but helpful video:

Why is this relevant? QM also undermines determinism. Physicist Brian Greene explains:

“We have seen that Heisenberg’s Uncertainty Principle undercuts Laplacian determinism because we fundamentally cannot know the precise positions and velocities of the constituents of the universe. Instead, these classical properties are replaced by quantum wave functions, which tell us only the probability that any given particle is here or there, or that it has this or that velocity” (Greene 2000: 341, emphasis added; see also Holt 2019, chapter 18).

In short, the dream of being able to predict future states of affairs with perfect knowledge evaporates. This is because it looks like reality, whatever it is, has "chanciness" built into it. In other words, it looks like, try as we might, deterministic predictions are not possible when it comes to the tiny stuff that we're all made out of.
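For readers who want to see the principle Greene refers to, it is usually written as the inequality below. The symbols are the standard ones rather than anything defined in this lesson: Δx is the uncertainty (standard deviation) in a particle's position, Δp is the uncertainty in its momentum, and ħ is the reduced Planck constant.

$$
\Delta x \, \Delta p \;\geq\; \frac{\hbar}{2}
$$

Read loosely, the more precisely a particle's position is pinned down, the less precisely its momentum can be, and vice versa. Laplace's demon needs both at once, with perfect precision, for every particle.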

And it doesn't even matter...

If you are rejoicing over the apparent downfall of determinism, you should hang on for a second. The version of causation that replaces determinism is strange, very open to interpretation, and seemingly random. Many thinkers feel that, whether it be determinism or quantum indeterminism, there is no theory of causation that leaves room for human free will.

The Dilemma of Determinism

- If determinism is true, then our choices are determined by factors over which we have no control.

- If indeterminism is true, then every choice is actually just a chance, random occurrence; i.e., not free will.

- But either determinism is true or indeterminism is true.

- Therefore, either our choices are determined or they are a chance occurrence; and neither of those is free will.
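The logical form of the argument above can be checked mechanically. The following Python sketch is one possible encoding, with variable names that are my own: it tries every combination of truth values for "determinism is true", "indeterminism is true", and "we have free will", and confirms that no combination makes all three premises true while free will is also true.

```python
from itertools import product


def implies(p, q):
    """Material conditional: 'if p then q'."""
    return (not p) or q


def dilemma_is_valid():
    """Return True if no truth assignment satisfies the premises yet keeps free will."""
    for determinism, indeterminism, free_will in product([True, False], repeat=3):
        premises_hold = (
            implies(determinism, not free_will)        # premise 1
            and implies(indeterminism, not free_will)  # premise 2
            and (determinism or indeterminism)         # premise 3
        )
        if premises_hold and free_will:
            return False  # counterexample: premises true, conclusion false
    return True


print(dilemma_is_valid())  # True
```

Note that this check only shows the argument is valid, meaning the conclusion follows if the premises are true. Whether the premises are actually true is a separate question, and it is exactly where philosophers who defend free will push back.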

I close with an ominous quote:

“The electrochemical brain processes that result in murder are either deterministic or random or a combination of both. But they are never free. For example, when a neuron fires an electric charge, this either may be a deterministic reaction to external stimuli or it might be the outcome of a random event, such as the spontaneous decomposition of a radioactive atom. Neither option leaves any room for free will. Decisions reached through a chain reaction of biochemical events, each determined by a previous event, are certainly not free. Decisions resulting from random subatomic accidents aren’t free either; they are just random” (Harari 2017: 284).

To be continued...

FYI

Suggested Reading: A.J. Ayer, Freedom and Necessity

TL;DR: Crash Course, Determinism vs Free Will

Link: Student Health Center Info and Link

Supplementary Material—

- Video: Big Think, Michio Kaku: Why Physics Ends the Free Will Debate

- Video: Closer to Truth, Do Humans Have Free Will?

- Video: Crash Course, Quantum Mechanics - Part 1

Related Material—

- Video: Epic History TV, Napoleon’s Masterpiece: Austerlitz 1805

- Video: Insights into Mathematics, Non-Euclidean geometry | Math History | NJ Wildberger

- Video: Scene from Waking Life

Advanced Material—

- Reading: Pamela Huby, The First Discovery of the Freewill Problem

- Reading: Ian Hacking, Nineteenth Century Cracks in the Concept of Determinism

- Reading: Susan Wolf, Asymmetrical Freedom

- Reading: Shaun Nichols, The Rise of Compatibilism

Footnotes

1. Bevin Alexander makes two points that are of interest here. First, he claims that Carl von Clausewitz, a general and military theorist, completely misunderstood the main lesson of Napoleon's victories. This is important because Clausewitz's work was essentially the "bible" for German strategy during WWI, and this explains why the Germans used wrongheaded tactics during that conflict. Second, he points out that Napoleon didn't conceive of any of the strategies that he employed; rather, Napoleon combined the strategies of many military theorists that came before him. This reminds me a bit of the technology company Apple. Apple didn't invent GPS, or touch-screen, or the internet, or any of the features that make a phone "smart". Apple merely integrated all these technologies (see chapter 4 of Mariana Mazzucato's 2015 The Entrepreneurial State).

2. This is not the first time in history that the reality of free will has been called into question. Two thousand years earlier, philosophers were already doubting our capacity to make free choices. “Epicurus [341-270 BCE] was the originator of the freewill controversy, and that it was only taken up with enthusiasm among the Stoics by Chrysippus [279-206 BCE], the third head of the school” (Huby 1967: 358).