Introduction to Course

The limits of my language mean the limits of my world.

~Ludwig Wittgenstein

Beginnings...

Students hardly know what to expect when they begin this course. It's completely understandable. Formal languages are hardly ever taught as formal languages. In other words, up until now, you've probably never been explicitly taught a formal language. But that is precisely what will happen in this course.

What is a formal language?

A formal language differs from a natural language in that natural languages tend to be much more fluid and, in a way, ambiguous. I sometimes marvel at how the phrase "Not bad" can be interpreted in multiple ways. If someone says this to you after you asked them how a movie is, then you can interpret them as saying, "The movie is good." If, however, you are debating how good a movie is, someone might say this phrase emphasizing the second half: "It's not bad." Now they're suggesting that, sure, maybe it's predictable or maybe it's slow in the beginning, but overall it's not (that) bad of a movie. And sometimes(!), if you say "Not bad" with a certain wink in the eye, you don't just mean that it's good; you imply that it's really good. A person who is attempting to learn American English as a second language could be forgiven for not quite grasping the meaning of the common expression "Not bad".

Natural languages also have funny syntax. Notice in the paragraph above that I included an exclamation point in parentheses in the middle of a sentence. This was placed there to emphasize that this is surprising. Notice that as you read it, you weren't confused about the overall meaning. You might've been slightly puzzled, but you didn't lose track of what was going on. I also inconsistently placed the period at the end of a quote: sometimes I put the period within the quotes and sometimes outside. But were you confused at any point? Probably not.

Formal languages lack these traits. For every string and operator in a formal language, terms which will be defined later, there is one and only one predetermined meaning. Moreover, if you violate a rule of grammar, like I did with the periods and quotation marks, then suddenly the expression is incoherent. Formal languages are far more strict than natural language. Unnaturally strict, in fact. It will be difficult to learn, but you'll gain insight into the way that many disciplines and technologies of the 21st century work; you'll learn the type of language that they use.

Technically, you've already learned a formal language: mathematics. Your teachers likely didn't emphasize the formal aspect of it. However, as you were acquiring your mathematical knowledge, you were learning that certain operators work in certain ways and not in others; for example, the symbol "+" is for adding. You also learned that there are rules of grammar or "syntax rules" in mathematics. You know intuitively that the expression "4 ÷=", perhaps read as "Four divided to the power of equal sign"(?), makes absolutely no sense.1

In this class, you'll be explicitly learning a formal language. In a nutshell, this is a language that was developed to answer the following question: If I know these things, do I also know this? Of course, you might want a more technical definition of logic. Let me try that now.

What is logic?

According to Herrick (2013), logic is the systematic study of the standards of correct reasoning; i.e., it is the study of the principles our reasoning should follow (p. 3). According to Hurley (1985), logic may be defined as the science that evaluates arguments; i.e., its aim is to develop a system of methods and principles that we may use as criteria for evaluating the arguments of others and as guides in constructing arguments of our own (p. 1). According to Bergmann, Moor, and Nelson (2013), the hallmark of deductive logic is truth-preservation. Reasoning that is acceptable by the standards of deductive logic is always truth-preserving; that is, it never takes one from truths to falsehood. Reasoning that is truth-preserving is said to be valid (pp. 1-2).

As if the preceding weren't complicated enough, these writers are focusing primarily on one type of logic: first-order logic. There are actually various other types of logic, but we won't be going into those. We'll only focus on first-order logic, and so I'll drop the "first-order" and just say "logic."

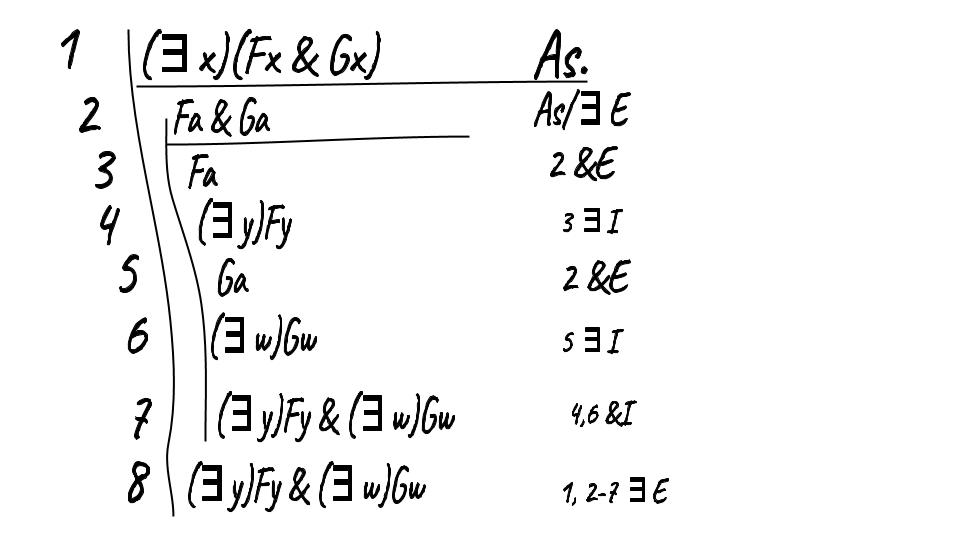

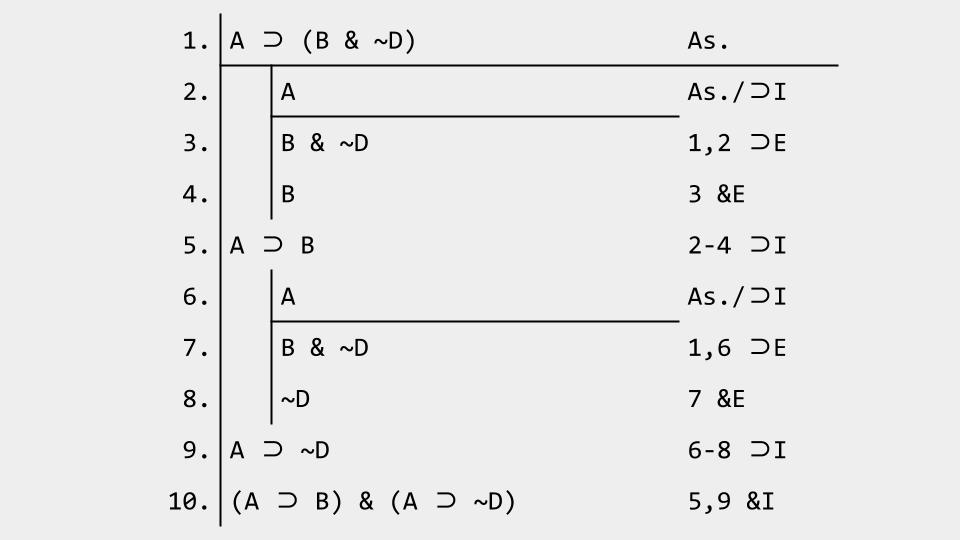

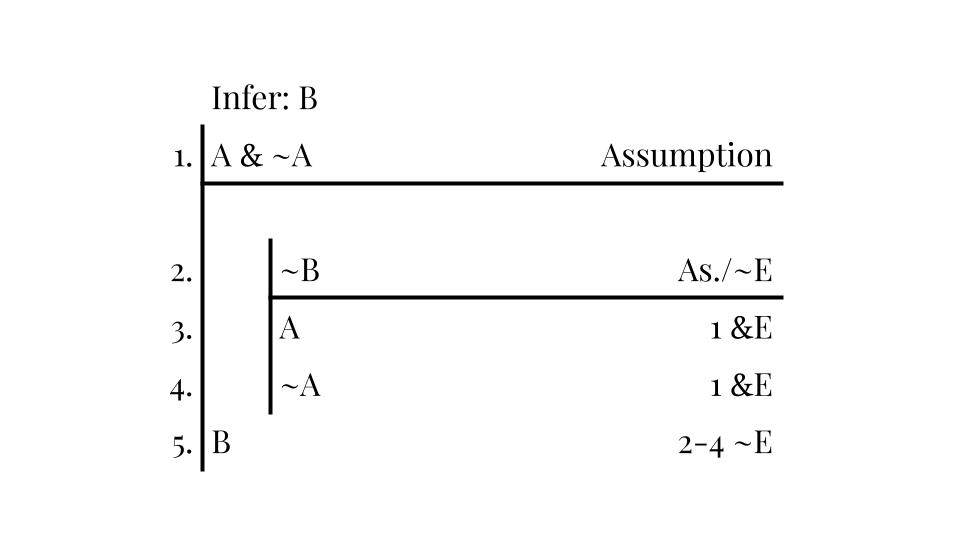

At this point, you might be getting a little nervous. Don't worry. I've paced this class so that it is doable if you put in the work. In other words, it is a challenging class, but if you do all the assignments diligently at the time that they are assigned, you'll be fine. In fact, many students are impressed with themselves at the end of the course. If all goes according to plan, you should be able to read the deduction below.

Important Concepts

Take a moment to go through the slides below. Make sure to memorize the important concepts of every lesson. It is on these concepts that the next lesson will build. Then the concepts from tomorrow will be the foundation for the concepts after that. Do not fall behind!

Also, although certain concepts won't make total sense right now, it's important to read the language used here to start to give your mind the tools it needs for understanding these concepts later. In chapter 1 of A Mind for Numbers, Barbara Oakley describes this activity as giving yourself "mental hooks" on which to hang tough concepts later. You'll be introduced to these tough concepts more formally later, when you are doing more focused thinking and learning.

Some comments

Logical consistency will be a cornerstone of everything we will be doing. We will define important concepts in this course, such as the notion of validity, in terms of logical consistency, so it is important that you understand it. Logical consistency only means that it's possible that all the sentences in a set are true at the same time. That's it. Don't equate consistency with truth. Two statements can be consistent while being false. For example, here are two sentences.

- "The present King of France is bald."

- "Baldness is inherited from your mother's side of the family."

"The present King of France is bald" is false. This is because there is no king of France. Nonetheless, these sentences are logically consistent. They can both be true at the same time, it just happens that they're not both true.2

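For small cases, the definition of logical consistency can be checked mechanically. Here is a minimal Python sketch, not part of the course materials. It treats each sentence as a function of a truth assignment and asks whether at least one assignment makes every sentence in the set true. The names `is_consistent`, `king_is_bald`, and `baldness_is_maternal` are made up for illustration, and treating each English sentence as a single atomic statement is a simplifying assumption.

```python
from itertools import product

def is_consistent(sentences, atoms):
    """Return True if some truth assignment makes every sentence true."""
    for values in product([True, False], repeat=len(atoms)):
        assignment = dict(zip(atoms, values))
        if all(sentence(assignment) for sentence in sentences):
            return True
    return False

# Hypothetical atomic statements standing in for the two example sentences.
atoms = ["king_is_bald", "baldness_is_maternal"]

king = lambda a: a["king_is_bald"]
maternal = lambda a: a["baldness_is_maternal"]
not_king = lambda a: not a["king_is_bald"]

print(is_consistent([king, maternal], atoms))  # True: one row makes both true
print(is_consistent([king, not_king], atoms))  # False: no row makes both true
```

The first check succeeds because some row of the truth table makes both sentences true, even though that row does not describe the actual world. The second fails because no row makes a sentence and its denial true together.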
Arguments will also be central to this class. Arguments in this class, however, will not be heated exchanges like the kind that couples have in the middle of an IKEA showroom. For logicians, arguments are just piles of sentences that are meant to support another sentence, i.e., the conclusion. The language of arguments has been co-opted into other disciplines, such as computer science, as we shall soon see.

As you learned in the Important Concepts, there are two major divisions to logic: the formal and informal branches. We will be focusing on formal logic. There are other classes that focus more on the informal aspect of argumentation, such as PHIL 105: Critical Thinking and Discourse. Classes like PHIL 105 focus on the analysis of language and the critical evaluation of arguments in everyday (natural) languages. One aspect of these classes that students tend to enjoy is the study of informal fallacies. Again, we won't be studying these here, but here's one just for fun:

Food for Thought...

On the horizon

What's ahead? I like to take a historical perspective in all my classes. I also like to take a decidedly interdisciplinary approach. I consider this the best way to understand how ideas progress, how some ideas are updated and improved upon and how others are discarded. In fact, students who have taken a course with me before would be forgiven for thinking that I like history or cognitive science more than philosophy.3

Nonetheless, we will take every opportunity to look at how logic relates to other disciplines, in particular mathematics, computer science, and artificial intelligence. We will also begin at the very origins of logic and tell its full story, sort of... The origins of logic are actually a complicated issue. One type of logic originated in India in the 6th century BCE. This logic had discernible syntax rules and was used to answer questions about the physical universe and the nature of knowledge. In China also, beginning around the 5th century BCE, a system of logic was devised. However, we won't be focusing on these.4

In the 5th and 4th centuries BCE, in Greece, something truly special was happening. This is one of the reasons why we'll be focusing on the logic that originated in Greece, although there are others (see footnote 4). Here a new way of thinking was being developed. A student was allowed to disagree with their teacher, to criticize the teacher's argument, and to develop their own theory. This is a middle way between dogmatic obedience and crude deprecation. It was this way of thinking, when institutionalized centuries later, that gave science the property of self-correction. Although a full analysis of what was going on during the "Greek miracle" is far beyond this course, I can tell you that this period is undoubtedly influential on the rest of human history (see Rovelli 2017, chapter 1; see also Lloyd 1999), as you'll come to see.

Footnotes

1. I might add that in France, due to the influence of David Hilbert, one of the greatest mathematicians of the 20th century, some educational psychologists pushed for teaching mathematics from formal rules first, as opposed to the more intuitive practical way of learning about numbers by counting objects, adding piles together, subtracting some from the pile, dividing up piles, etc. Thankfully, this episode is over (see Dehaene 1999: 140, 241-42).

2. Contrary to popular belief, apparently baldness is not all your mother's fault. At the very least, smoking and drinking have an effect.

3. Philosophers have criticized me for not being enough of a philosopher. What I really attempt to do is use the tools and findings of Cognitive Science (and other relevant disciplines) to try to solve philosophical problems. In a sense, I believe most philosophical problems are actually empirical problems that haven't been framed properly.

4. There are many reasons for focusing on the Western Tradition, and so I'd like to preempt any accusations that the college is being Eurocentric in requiring this particular class. It is true, of course, that Europeans and the descendants of Europeans had come to dominate much of the world by the middle of the 20th century, whether it be culturally, economically, politically, or militarily (as in the numerous American occupations after World War II). It's also true that European standards (of reasoning, of measuring, of monetary value, etc.) have become predominant in the world as part of a general (if accidental) imperial project (see chapter 21 of Immerwahr's 2019 How to Hide an Empire). It's also the case that public education was being formulated and put into practice during this period of European dominance, and so there is a tradition in universities of following this model and emphasizing the European role. In fact, some scholars (e.g., Loewen 2007, Aoki 2017) have hypothesized that the function of education is at least partially to indoctrinate students into the dominant culture, typically one endorsed by the elites. All of this is both true and irrelevant for why we are focusing on the Western tradition in this course. As it turns out, only Aristotle's logic focused on logical force. In Shenefelt and White's (2013) If A then B, the authors juxtapose Aristotle’s syllogistic and Indian and Chinese logic. Indian logic was apparently preoccupied with rhetorical force more than anything. It featured repetition and multiple examples of the same principle so that its primary function was persuasion. Chinese logic apparently did study some fallacious reasoning, but (strangely) never its counterpart (i.e., validity). It was only Aristotle’s logic that focused on logical force, the study of validity itself. The Greeks wanted to know why an argument’s premises force the conclusion on you.
In short, only Aristotle studied logical force unadulterated by rhetorical considerations, and this led to major historical events centuries (and millennia) later. The interested student should check out Shenefelt and White (2013), in particular chapters 1, 2, and 9.

Validity

Knowledge of the fact differs from knowledge of the reason for the fact.

~Aristotle

Origins...

Aristotle is the most famous student of Plato. He was prolific, writing on all major topics of inquiry established during the time that he was alive. Like his teacher, he also founded a school: the Lyceum. He was the tutor of Alexander the Great, son of Philip II. He was also the founder of the first school of Logic, a discipline he created and which is still studied when learning philosophy, computer science, and mathematics. You'll soon come to see why.1

"Aristotle was born in 384 BC, in the Greek colony and seaport of Stagirus, Macedonia. His father, who was court physician to the King of Macedonia [Amyntas III, father of Philip II], died when Aristotle was young, and the future founder of logic went to live with an uncle... When Aristotle was 17, he was sent to Athens to study at Plato’s Academy, the first university in Western history. Here, under the personal guidance of the great philosopher Plato (427-347 BC), the young Aristotle embarked on studies in every organized field of thought at the time, including mathematics, physics, cosmology, history, ethics, political theory, and musical theory... But Aristotle’s favorite subject in college was the field he eventually chose as his area of concentration: the subject the Greeks had only recently named philosophy… [And] Aristotle would write the very first history of philosophy, tracing the discipline back to its origins in another Greek colony, the bustling harbor town of Miletus... Thales of Miletus (625-546 BC) first rejected the customary Greek myths of Homer and Hesiod, with their exciting stories of gods, monsters, and heroes proposed as explanations of how the world and all that is in it came to be, and offered instead a radically new type of explanation of the world” (Herrick 2013: 8-9; interpolation is mine).

As you can see from the quote above (and as we mentioned last time), Aristotle was around during a time in which a new way of thinking was being devised. No, I'm not just talking about how this "super cool" new discipline of Philosophy was coming about. This so-called "Greek Miracle" is truly interdisciplinary and a historical landmark in intellectual history. Surprisingly, though, scholars have a hard time characterizing exactly what made this period so special. I'll give you two theories on this topic.

As we saw last time, some scholars stress the central role that debating played. Physicist Carlo Rovelli, when giving his history of quantum gravity, starts back in Ancient Greece, with the ideas of Democritus who, under the tutelage of Leucippus, develops atomic theory: things are composed of smaller objects which are indivisible.2 Rovelli stresses that students were allowed to debate their teachers, and that ideas had to be defended with more rigor. Historian of mathematics Luke Heaton agrees with Rovelli. Heaton notes the differences in the approaches taken by different cultures in the field of mathematics. For example, the ancient Egyptians performed calculations and inferences exactly in the ways of their ancestors. In fact, they literally believed this to be a matter of life and death. The whole cosmic harmony of their goddess Ma’at "could turn to chaos and violence if the ruler or his people did not adhere to their traditions and rituals." In other words, the Ancient Egyptians did things the way they had always been done, and could not even imagine attempting new methods of mathematics. In contrast, however, Greeks debated mathematical truths. They emphasized the rigorous articulation of logical principles in argumentation (see Heaton 2017: 34-5; see p. 34 for quote).

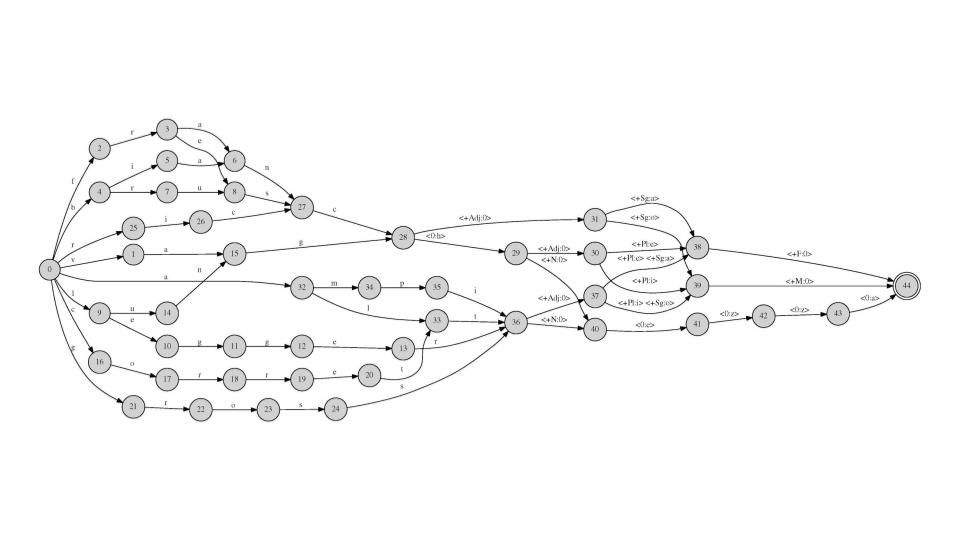

A gate-level circuit

(see footnote 1 for more info).

A second theory comes from classicist Jean-Pierre Vernant. Vernant argues that, beginning in the sixth century BCE, there was a new "positivist" type of reflection concerning nature. This is to say that thinkers weren't satisfied with divine explanations any longer. One example that Vernant gives is that thinkers were no longer satisfied with explaining cosmogenesis through sexual unions (Vernant 2006: 219). And so they emphasized theories that were more testable and arguable. They sought ways of explaining the world that were amenable to examination. According to Vernant, the special aspect of Greek thought isn't just that they could argue; it's that they would limit their argumentation to domains where argumentation might lead to a resolution. In other words, the Greeks moved away from superstition and supernatural explanations (where one explanation is just as good as another) towards explaining reality in physical terms (which is a step towards modern science; see Vernant 2006: 371-80).

Whatever the right way of characterizing the Greek miracle might be, it's clear that there was something special going on. And Aristotle's ideas are a part of this tradition. Sure, Aristotle had some ideas that were also very, very wrong. In fact, some of his ideas actually impeded progress, as in physics. And we can talk about those if you want. But paired with that must be the acknowledgement that some of his ideas are nothing short of intellectual breakthroughs, breakthroughs that were expanded and improved upon by others, breakthroughs that shaped the modern world. For example, Aristotle's works on botany and zoology taught us how to systematize, an important step in scientific classification. Most important, I think, and the subject of this course, is his development of logic. It appears that, although logical principles were routinely used in argumentation, no one had sat down to codify them. Aristotle did.

“[W]e can say flatly that the history of [Western] logic begins with the Greek Philosopher Aristotle... Although it is almost a platitude among historians that great intellectual advances are never the work of only one person (in founding the science of geometry Euclid made use of the results of Eudoxus and others; in the case of mechanics Newton stood upon the shoulders of Descartes, Galileo, and Kepler; and so on), Aristotle, according to all available evidence, created the science of logic absolutely ex nihilo [out of nothing]” (Mates 1972: 206; interpolations are mine).

Important Concepts

Distinguishing Deduction and Induction

As you saw in the Important Concepts, I distinguish deduction and induction thus: deduction purports to establish the certainty of the conclusion while induction establishes only that the conclusion is probable.3 So basically, deduction gives you certainty, while induction gives you probabilistic conclusions. If you perform an internet search, however, this is not always what you'll find. Some websites define deduction as going from general statements to particular ones, and induction as going from particular statements to general ones. I understand this way of framing the two, but this distinction isn't foolproof. For example, you can write an inductive argument that goes from general principles to particular ones, as only deduction is supposed to do:

- Generally speaking, criminals return to the scene of the crime.

- Generally speaking, fingerprints have only one likely match.

- Thus, since Sam was seen at the scene of the crime and his prints matched, he is likely the culprit.

I know that I really emphasized the general aspect of the premises, and I also know that those statements are debatable. But what isn't debatable is that the conclusion is not certain. It only has a high degree of probability of being true. As such, using my distinction, it is an inductive argument. But clearly we arrived at this conclusion (a particular statement about one guy) from general statements (about the general tendencies of criminals and the general accuracy of fingerprint investigations). All this to say that for this course, we'll be exclusively using the distinction established in the Important Concepts: deduction gives you certainty, induction gives you probability.

In reality, this distinction between deduction and induction is fuzzier than you might think. In fact, recently (historically speaking), Axelrod (1997: 3-4) argued that agent-based modeling, a newfangled computer modeling approach to solving problems in the social and biological sciences, is a third form of reasoning, neither inductive nor deductive. As you can tell, this story gets complicated, but it's a discussion that belongs in a course on Argument Theory.

Food for Thought...

Alas...

In this course we will only focus on deductive logic. Inductive logic is a whole course unto itself. In fact, it's more like a whole set of courses. I might add that inductive reasoning might be important to learn if you are pursuing a career in computer science. This is because there is a clear analogy between statistics (a form of inductive reasoning) and machine learning (see Dangeti 2017). Nonetheless, this will be one of the few times we discuss induction. What will be important to know for our purposes is only the basic distinction between the two forms of reasoning.

Assessing Arguments

Validity and soundness are the jargon of deduction. Induction has its own language of assessment, which we will only briefly cover next time. These concepts will be with us through the end of the course, so let's make sure we understand them. When first learning the concepts of validity and soundness, students often fail to recognize that validity is a concept that is independent of truth. Validity merely means that if the premises are true, the conclusion must be true. So once you've decided that an argument is valid, a necessary first step in the assessment of arguments, then you proceed to assess each individual premise for truth. If all the premises are true, then we can further brand the argument as sound.4 If an argument has achieved this status, then a rational person would accept the conclusion.

Let's take a look at some examples. Here's an argument:

- Every painting ever made is in The Library of Babel.

- “La Persistencia de la Memoria” is a painting by Salvador Dalí.

- Therefore, “La Persistencia de la Memoria” is in The Library of Babel.

At first glance, some people immediately sense something wrong about this argument, but it is important to specify what is amiss. Let's first assess for validity. If the premises are true, does the conclusion have to be true? Think about it. The answer is yes. If every painting ever is in this library and "La Persistencia de la Memoria" is a painting, then this painting should be housed in this library. So the argument is valid.

But validity is cheap. Anyone who can arrange sentences in the right way can engineer a valid argument. Soundness is what counts. Now that we've assessed the argument as valid, let's assess it for soundness. Are the premises actually true? The answer is: no. The second premise is true (see the image below). However, there is no such thing as the Library of Babel; it is a fiction invented by a poet. So, the argument is not sound. You are not rationally required to believe it.
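The two-step assessment just carried out (validity first, then soundness) can be sketched in Python. This is only an illustration under stated assumptions: the function names `is_valid` and `is_sound` are invented here, the first premise is narrowed from "every painting" to this one painting so that a plain truth-table check suffices, and the `actual_world` values encode the facts as this lesson describes them.

```python
from itertools import product

def is_valid(premises, conclusion, atoms):
    """Valid: no assignment makes all premises true and the conclusion false."""
    for values in product([True, False], repeat=len(atoms)):
        a = dict(zip(atoms, values))
        if all(p(a) for p in premises) and not conclusion(a):
            return False
    return True

def is_sound(premises, conclusion, atoms, actual_world):
    """Sound: valid, and every premise is true in the actual world."""
    valid = is_valid(premises, conclusion, atoms)
    return valid and all(p(actual_world) for p in premises)

atoms = ["is_painting", "in_library"]

# Premise 1, narrowed to one painting: if it is a painting, it is in the Library.
premise_1 = lambda a: (not a["is_painting"]) or a["in_library"]
premise_2 = lambda a: a["is_painting"]
conclusion = lambda a: a["in_library"]

# Assumed facts: the work is a painting, and no Library of Babel exists to hold it.
actual_world = {"is_painting": True, "in_library": False}

print(is_valid([premise_1, premise_2], conclusion, atoms))                # True
print(is_sound([premise_1, premise_2], conclusion, atoms, actual_world))  # False
```

Notice that `is_valid` never consults `actual_world`. That is the sense in which validity is independent of truth: only the soundness check looks at how things actually are.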

Here's one more:

- All lawyers are liars.

- Jim is a lawyer.

- Therefore, Jim is a liar.

You try it!5

Decoding Validity

Closing Comments

As you might've noticed above, people need some time to calibrate their concept of logical consistency. It just means the statements can be true at the same time. Don't let blatantly false statements, especially politically charged ones, trip you up. If you do let yourself be tricked in this way, you can expect to have some problems on my test. You've been warned.

Also, be sure to distinguish between validity and soundness, a topic also covered in the video above. Validity is about the logical relationship between premises and conclusion, namely that the premises fully support the conclusion. Soundness is validity plus the premises actually being true. Finally, both validity and soundness are properties of arguments. Don't forget! Again, you've been warned.

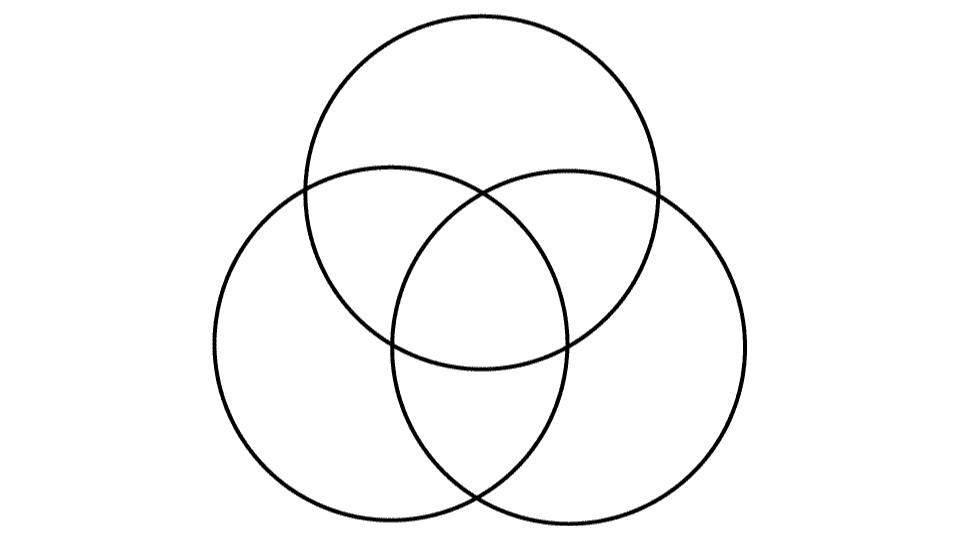

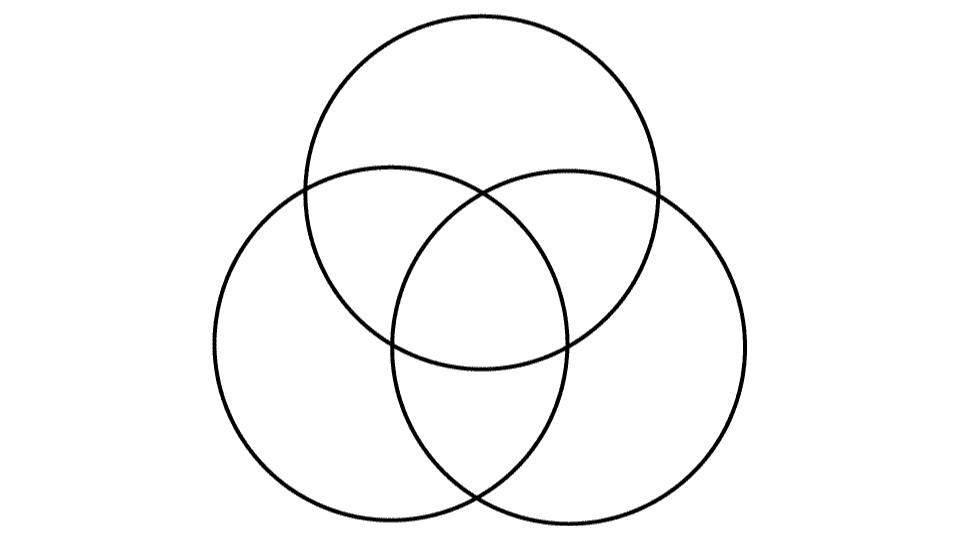

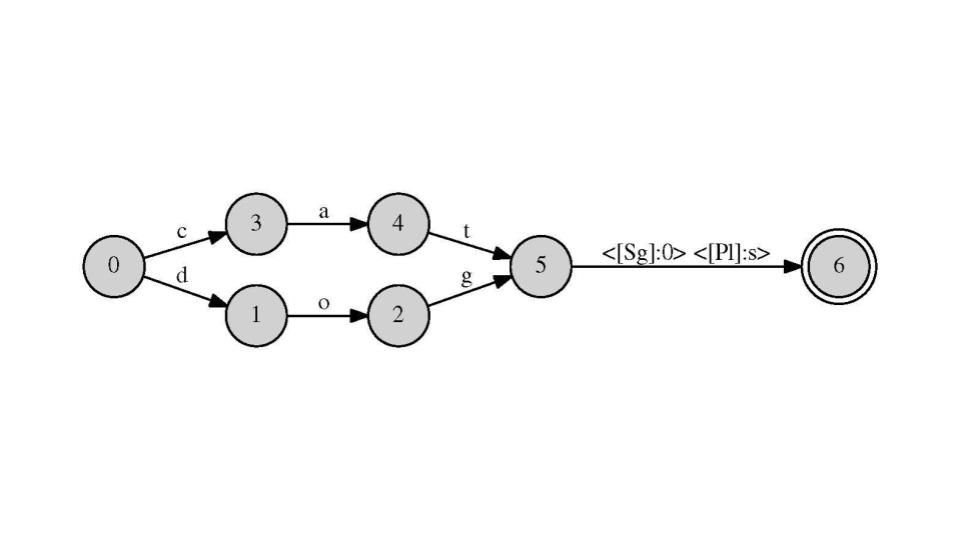

Lastly, you might've noticed pictures of circles in this lesson. There'll be more to come. Why? You'll see...

FYI

Homework!

- Validity and Soundness Handout

- In this assignment, you will use the Imagination Method to assess arguments for validity; that is to say, you will simply think about the argument and attempt to figure out if it is valid or not.

- The Logic Book (6e), Chapter 1 Glossary

Footnotes

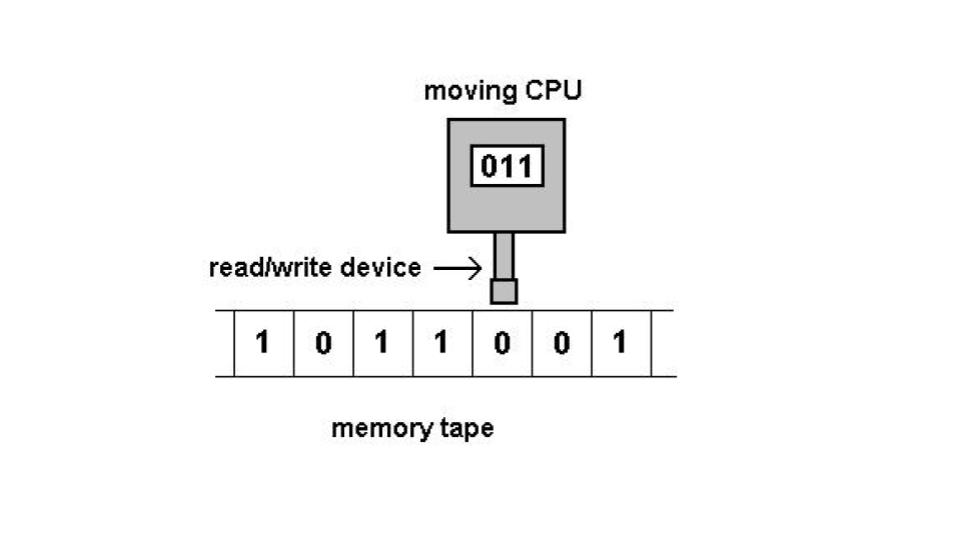

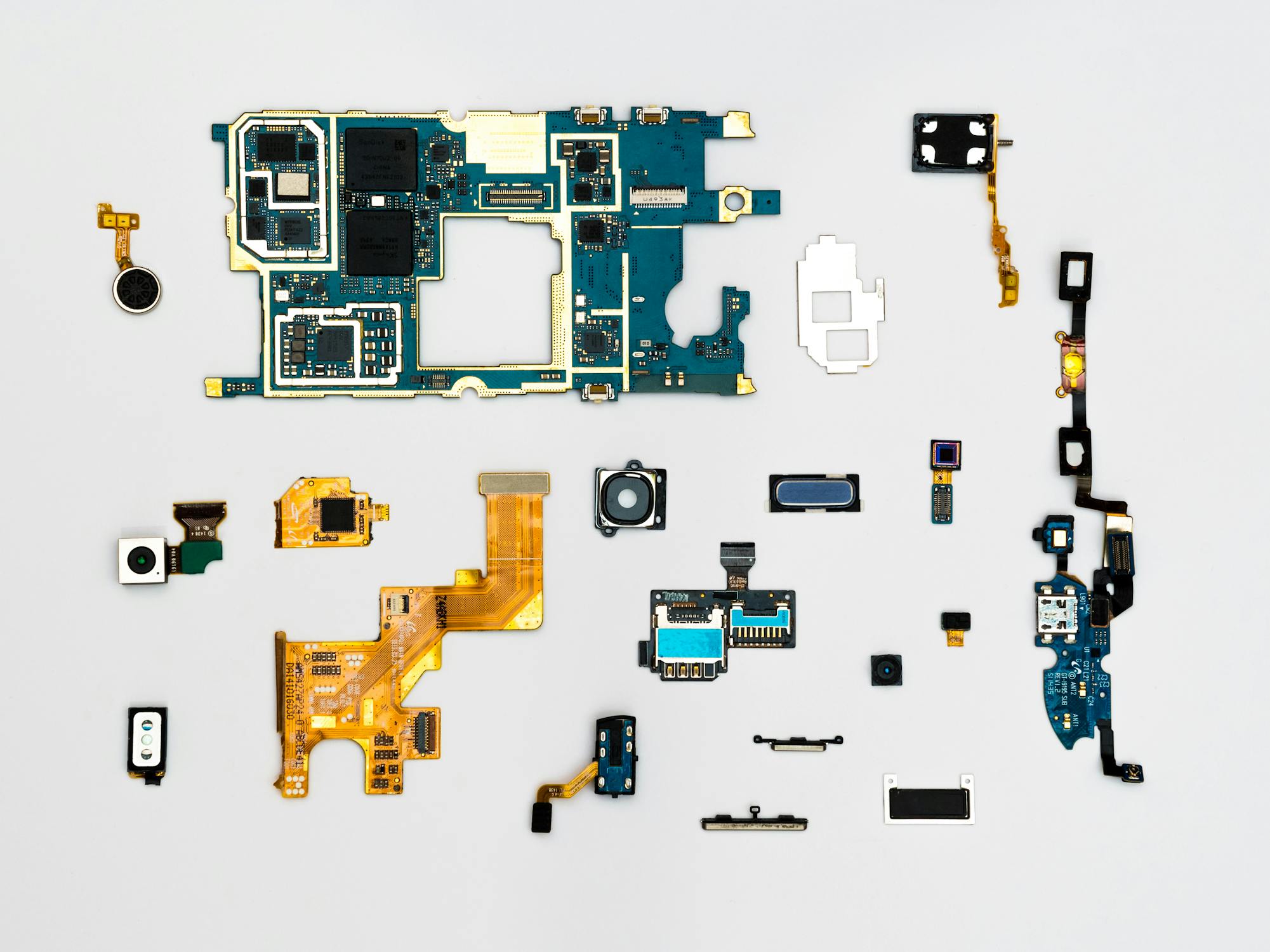

1. A basic building block of computers is a logic gate, which is functionally the same as some of the truth-tables we'll be learning in Unit II. Through a combination of logic gates you can build gate-level circuits, and out of gate-level circuits you build modules, such as the arithmetic logic unit (a unit in a computer which carries out arithmetic and logical operations). Eventually, all the elements of a module were forced onto a single chip, called an integrated circuit, or an IC for short (see Ceruzzi 2012: 86-90). In the image you can see a gate-level circuit.

2. Not to get into theoretical physics here, but some students make the erroneous inference that because we split the atom, atomic theory is false. Not at all. It means, among other things, that physicists used the label "atom" too soon; they misapplied it by using it on objects that actually are divisible. But more importantly, quantum theory leads to the conclusion that there really is a lower limit to the size of things in the world, and they can't get any smaller than that lower limit. In a sense, Democritus was right. Rovelli (2017) refers to this granularity as one of the lessons learned from quantum theory.

3. It is important to note that different thinkers distinguish between deduction and induction in different ways (see Wilbanks 2010). Depending on how you distinguish these two, abduction is either a third form of reasoning or a type of induction. I'm agnostic on this matter. However, one of the main books I'm using in building this course is Herrick (2013), and it is his way of characterizing deduction/induction that we will be using. On the account that we're using, there's only deduction and induction, with abduction being a form of induction (see chapter 33, titled "Varieties of Inductive Reasoning", of Herrick 2013 to learn about his conception of abduction, also known as "inference to the best explanation").

4. Another common mistake that students make is that they think arguments can only have two premises. That's usually just a simplification that we perform in introductory courses. Arguments can have as many premises as the arguer needs.

5. This argument is valid but not sound, since there are some lawyers who are non-liars (although not many).

Categorical Logic 1.0

It requires a very unusual mind to undertake the analysis of the obvious.

~Alfred North Whitehead

Recap

As you know by now, validity is an important concept in this course. Equally important, insofar as they help us wrap our minds around validity, are the notions of logical truth and logical falsity. Recall that a sentence is logically true if and only if it is not possible for the sentence to be false. Here are two examples of logically true sentences:

- "Either Yuri is a tricycle or Yuri is not a tricycle."

- "It’s false that I am a banana and I am not a banana."

In the first sentence, it is obvious that either it is the case that 'Yuri' refers to a tricycle or it's not. That's just it. There are no other options. Since or-sentences, formally called disjunctions, are true as long as at least one of the disjuncts is true, the sentence is true no matter what. In the second sentence, we have the denial of a contradiction. If I were to tell you that "I am a banana and I am not a banana", you'd know two things. 1. I'm probably losing my mind; and 2. it is not possible to both be a banana and not be a banana. This is a contradiction. It's literally not possible for this sentence to be true. In other words, this is a logically false sentence. But notice that the example bulleted above is the negation of this contradiction. This means that, in effect, the sentence is saying that this contradiction is false, which is true. It will always be true that a contradiction is false. This is why we label the full sentence, "It’s false that I am a banana and I am not a banana", logically true.

The world, of course, is not this simple. If only all sentences were either always true or always false. In fact, most sentences are logically indeterminate; that is, they are neither logically true nor logically false. Put another way, the truth or falsity of a logically indeterminate sentence does not hinge on the logical words, like "not" and "or", that it contains.
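The three categories can be told apart by checking every row of a sentence's truth table. The following Python sketch is an illustration only: the function name `classify` and the atom names are invented here, and each simple English clause is assumed to be a single true-or-false statement.

```python
from itertools import product

def classify(sentence, atoms):
    """Label a sentence by checking every row of its truth table."""
    results = [sentence(dict(zip(atoms, values)))
               for values in product([True, False], repeat=len(atoms))]
    if all(results):
        return "logically true"
    if not any(results):
        return "logically false"
    return "logically indeterminate"

# "Either Yuri is a tricycle or Yuri is not a tricycle."
tricycle = lambda a: a["yuri_is_tricycle"] or not a["yuri_is_tricycle"]
# "I am a banana and I am not a banana."
banana = lambda a: a["i_am_banana"] and not a["i_am_banana"]
# "It's false that I am a banana and I am not a banana."
not_banana = lambda a: not banana(a)
# "Yuri is a tricycle." (no logical words doing the work)
plain = lambda a: a["yuri_is_tricycle"]

print(classify(tricycle, ["yuri_is_tricycle"]))  # logically true
print(classify(banana, ["i_am_banana"]))         # logically false
print(classify(not_banana, ["i_am_banana"]))     # logically true
print(classify(plain, ["yuri_is_tricycle"]))     # logically indeterminate
```

The last sentence comes out indeterminate because its truth depends on what Yuri actually is, not on the logical words it contains.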

The last concept we'll recap here is that of logical entailment, for which we will use the symbol "⊨". This symbol, which is read as 'double turnstile', asserts that the set of sentences on its left logically entails the sentence on its right. Given a set Γ of sentences, Γ logically entails a sentence if and only if it is impossible for all the members of Γ to be true and that sentence false. I know this sometimes looks scary to students. Let me try that again.

Γ is simply the Greek letter gamma. It stands for a set of sentences like, {“Ann likes swimming”, “Bob likes pudding”, “Carlos hates Dan”}.1 This set (Γ) entails the following sentence: “Carlos hates Dan”. Why? Well, clearly because it is a member of the set. It's right in there. We will learn about more complex methods of entailment, but this is the basic idea. A set of sentences logically entails a sentence if and only if it is impossible for all the members of the set to be true and that sentence false. Written out, it looks like this:

{“Ann likes swimming”, “Bob likes pudding”, “Carlos hates Dan”} ⊨ “Carlos hates Dan”
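For readers who like to see it in symbols, the definition and the special case used in this example can be sketched as follows. The letters φ (for the entailed sentence) and ψ (for an arbitrary member of Γ) are introduced here only for illustration and are not the course's official notation.

```latex
\[
\Gamma \models \varphi
\quad\text{if and only if}\quad
\text{it is impossible for every } \psi \in \Gamma \text{ to be true while } \varphi \text{ is false.}
\]

% Special case used in the example: membership guarantees entailment.
\[
\varphi \in \Gamma \;\Longrightarrow\; \Gamma \models \varphi
\]
```

The second line holds because, if every member of Γ is true, then φ, being one of those members, is true as well. The forbidden situation (all of Γ true, φ false) can therefore never arise.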

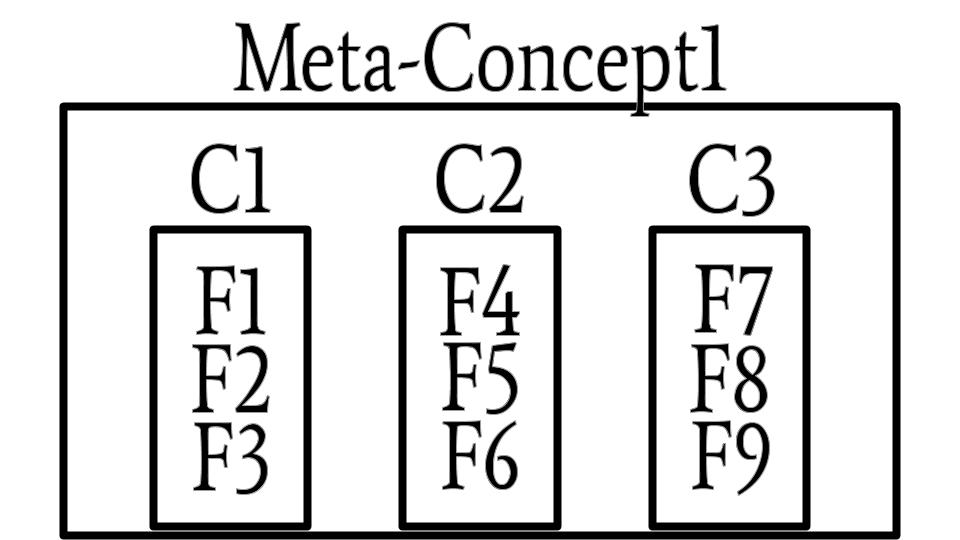

Special cases of logical concepts

The Jargon of Induction

Before delving into deduction for good, we should briefly look at the language used to assess inductive arguments. An inductive argument is said to be strong when, if the premises are true, then the conclusion is very likely true. An inductive argument is cogent when a. it is strong, and b. it has true premises.

During the middle of the 19th century there were great advancements in formal deductive logic (which will be covered in this course), as well as a revival in inductive logic spearheaded by the British philosopher John Stuart Mill (pictured below), who is mostly known for his Utilitarian system of ethics. All this to say that the study of inductive reasoning is an extremely worthwhile task. As previously mentioned, inductive logic will unfortunately not be covered in this course, but Mill’s collected works, including his A System of Logic, Ratiocinative and Inductive, are available online (see also Hurley 1985, chapter 9).

Storytime!

Previously, I had stated that Aristotle devised the science of logic ex nihilo. This is true, since no one in the Western tradition had explicitly worked out the study of logical concepts the way Aristotle did. This is not to say, however, that thinkers before this time weren't making use of logical concepts in their argumentation. All of us naturally use logical language. That is, we tend to make use of logical words such as "and", "or", "if...then", etc. Developmental and comparative psychologist Michael Tomasello even has a theory as to when sapiens developed the capacity to use logical language, a step which required new psychological capacities (see Tomasello 2014: 107).

We all even naturally make use of the important concept of logical consistency. Simply think about a time when someone was blatantly contradicting themselves. It bothered you not merely because they were probably lying, but because inconsistencies (typically) trigger some mental discomfort, alerting us that something is wrong.

So it shouldn't surprise us that philosophers before Aristotle were making use of logical concepts even without the formal study of logic having been initiated. I could list basically any philosopher here, but, in an introductory course, there is no better example than Socrates.

Statue of Xenophon.

Most of what we know about Socrates comes from his two most famous students: Plato and Xenophon. From what we can piece together, Socrates clearly saw the discipline of philosophy as a way of life. He taught, contrary to the traditions of his time, that virtue wasn’t associated with noble birth; rather, it is something that is learned, a form of moral wisdom. His conversations with others, at least according to Plato, would help people search for the right answers to life's most fundamental question: how should I live? Repeatedly, Socrates stressed the need to develop moral strength, the requirement that we use our powers of reason to reflect on our lives and the lives of those around us, and, ultimately, that we devote ourselves to wisdom, justice, courage, and moderation.

In many cases, Socrates first had to get the people he had conversations with to realize a deficiency in their view. Only then could he help them move towards more sound positions. In one of Plato's dialogues, Euthyphro, Socrates discusses the nature of piety with Euthyphro, who is mired in inconsistencies. This dialogue is sometimes painful to read since Euthyphro is clearly reeling. Socrates, as a character in Plato's dialogue, is making use of logical techniques for showing inconsistencies. All this, remember, was before the science of logic had been explicitly begun. This is a testament to the intellectual profundity of Socrates, a trait he was able to impart to his students, Plato and Xenophon.

Important Concepts

Clarifications

As you learned in the Important Concepts above, Aristotle focused on the logical form of sentences and realized that there are only four types of categorical sentences. I'll reproduce them here:

The Four Sentences of Categorical Logic2

The universal affirmative (A): All S are P.

The universal negative (E): No S are P.

The particular affirmative (I): Some S are P.

The particular negative (O): Some S are not P.

Let's make some clarifications. Aristotle believed that all declarative sentences can be put into this form. Of course, there are other types of sentences with other linguistic functions, e.g., exclamations. But only declarative sentences can be true or false, and so those are the ones we are concerned with. Remember, logic is concerned with truth-preservation. Exclamations are neither true nor false. It would be a category mistake to label a sentence like "Golf sucks!" or "SUSHI!!!" or "¡Vamos Pumas!" as either true or false. They are more so expressions of emotion on the part of whoever uttered the sentence. So, declarative sentences are what Aristotle had in mind when he was thinking of logical form.

Also, you must've noticed in the Important Concepts a clarification about the word "some". This is important to learn now, so you don't make mistakes later on. "Some" means "at least one" to logicians. Students often tell me that "some", to them, means "more than one". While you're engaging in assessing arguments for validity, though, you must use the logician's sense of "some".

Here's a related issue. What do you intuit if someone says, "There are some tamales that are spicy"? You might interpret this as saying both "There are some tamales that are spicy" and "There are some tamales that are not spicy". This might especially be the case if you don't like spicy food. You hear the first sentence as implying the second one. However, in this class, we will use a logically rigorous definition of implication, which we won't really dive into until Units III and IV. The long and short of it is that if you see a sentence like "There are some tamales that are spicy", this does not imply the sentence "There are some tamales that are not spicy". The only time you can assume that there are tamales that are not spicy is if you are given the sentence "There are some tamales that are not spicy".
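One way to make the logician's sense of "some" concrete is to model each class as a Python set. This is only a sketch: the function names and the tamale labels are made up for illustration, and treating classes as small finite sets is my assumption, not part of Aristotle's system.

```python
def all_are(s, p):       # A: All S are P
    return s <= p

def no_are(s, p):        # E: No S are P
    return not (s & p)

def some_are(s, p):      # I: at least one S is P
    return len(s & p) >= 1

def some_are_not(s, p):  # O: at least one S is not P
    return len(s - p) >= 1

tamales = {"tamal 1", "tamal 2", "tamal 3"}
spicy_things = {"tamal 1", "tamal 2", "tamal 3", "salsa"}

print(some_are(tamales, spicy_things))      # True
print(some_are_not(tamales, spicy_things))  # False
```

In this made-up situation every tamal is spicy, so "Some tamales are spicy" is true while "Some tamales are not spicy" is false. That is why the first sentence cannot imply the second.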

Standard Form in Categorical Logic

With the clarifications out of the way, let's practice putting sentences into their proper logical form. We will refer to this as the standard form for sentences in categorical logic. The rules are simple. Make sure that the premises/conclusion are in the following order:

- The quantifier (which is either "all", "no", or "some")

- Subject class (led by a noun)

- Copula (i.e., the word "are")

- Predicate class (also led by a noun)
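If it helps to see the four slots as a data structure, here is a minimal Python sketch. The class name `CategoricalSentence` and the `negated` flag (which I use to handle "are not" in O-type sentences) are my own conventions, not standard notation.

```python
from dataclasses import dataclass

@dataclass(frozen=True)
class CategoricalSentence:
    quantifier: str        # "all", "no", or "some"
    subject: str           # subject class, e.g. "tamales"
    predicate: str         # predicate class, e.g. "spicy things"
    negated: bool = False  # True only for "Some S are not P"

    def letter(self):
        """Return the sentence type: A, E, I, or O."""
        if self.quantifier == "all" and not self.negated:
            return "A"
        if self.quantifier == "no" and not self.negated:
            return "E"
        if self.quantifier == "some":
            return "O" if self.negated else "I"
        raise ValueError("not a standard-form categorical sentence")

    def __str__(self):
        copula = "are not" if self.negated else "are"
        return f"{self.quantifier.capitalize()} {self.subject} {copula} {self.predicate}."

sentence = CategoricalSentence("some", "tamales", "spicy things")
print(sentence)           # Some tamales are spicy things.
print(sentence.letter())  # I
```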

Here are various examples. After a few of them, try to rewrite them yourself before you see how I put them into standard form. It's ok if our subject and predicate classes aren't the same, since we are likely to use different nouns to start off our categories.


Three Types of Categorical Reasoning

Although Aristotle's categorical reasoning has now been formalized into a robust mathematical framework (see Marquis 2020), Aristotle began with just three types of categorical reasoning. First, an immediate inference is an argument composed of exactly one premise and one conclusion immediately drawn from it. For example:

- All Spartans are brave persons.

- So, some brave persons are Spartans.

A mediate inference is an argument composed of exactly two premises and exactly one conclusion in which the reasoning from the first premise to the conclusion is “mediated” by passing through a second premise. This type of argument is also called a categorical syllogism. For example:

- All goats are mammals.

- All mammals are animals.

- Therefore, all goats are animals.

Lastly, a sorites is a chain of interlocking mediate inferences leading to one conclusion in the end. For example:

- All Spartans are warriors.

- All warriors are brave persons.

- All brave persons are strong persons.

- So, all Spartans are strong persons.

Looking ahead

The whole point of deductive logic at this early stage was to see which conclusions necessarily followed from their premises. In other words, it was simply the study of validity. Hence, Aristotle devised different ways for assessing the three types of categorical reasoning for validity. These will occupy us for the rest of this unit. We will begin with immediate inferences, or one-premise categorical arguments. After that, we will move on to mediate inferences, which are the main challenge of this unit. In fact, mediate inferences will be the reason why you might dream of circles in the nights to come. After that, we will take a brief look at assessing sorites for validity.

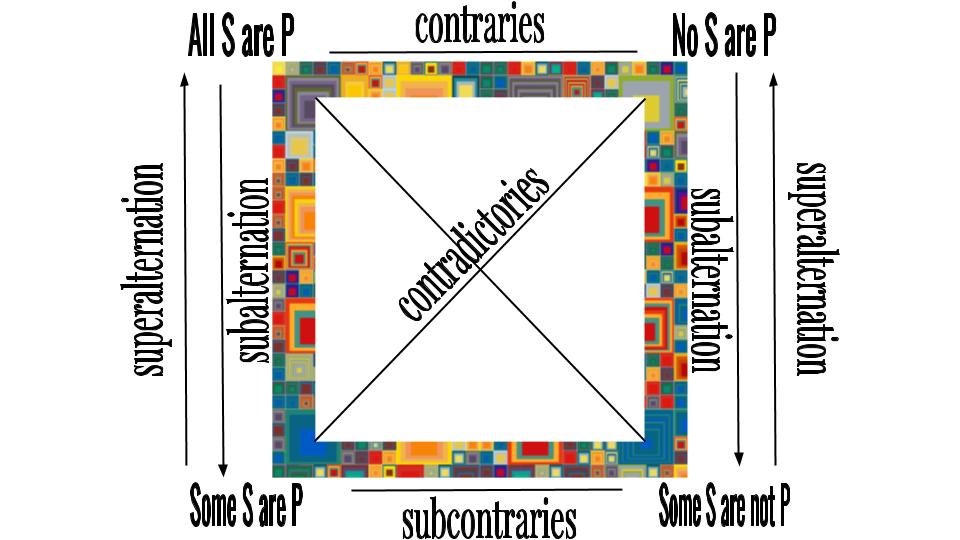

But before we can run, we have to crawl. We'll begin with immediate inferences. Aristotle developed two methods for assessing these one-premise arguments: 1. the Square of Opposition and 2. the laws of conversion, obversion, and contraposition. We will focus on the Square of Opposition. This will require that we learn how to use a "paper computer". Stay tuned.

FYI

Homework!

Advanced Material—

- Book Chapter: Merrie Bergmann, James Moor, and Jack Nelson, The Logic Book, Chapter 1

Footnotes

1. Note that we use the "{" "}" symbols to capture the content of a set of sentences.

2. There is a reason why the four sentences of categorical logic are labeled "A", "E", "I", and "O". The universal and particular affirmative are labeled "A" and "I" since these are the first two vowels in the Latin word affirmo, which means "I affirm". The universal and particular negative are "E" and "O" for the vowels in the Latin word nego, which means "I deny".

Homework for Unit 1, Lesson 3: Categorical Logic 1.0

Note: Click here for a PDF of this assignment.

Assessing Categorical Arguments for Validity: Intuitive Method Edition

Indicate whether the following arguments are valid or invalid. Use the Imagination Method.

All philosophers are lovers of truth. No lovers of truth are closed-minded people. Thus, no philosophers are closed-minded people.

No soldiers are rich. No rich persons are poets. Hence, no soldiers are poets.

No scientists are poets. Some scientists are logicians. Therefore, some logicians are not poets.

Some actors are sculptors. Some poets are not actors. So, some poets are not sculptors.

Complete the following arguments in such a way that each is valid:

All cats are _______.

Some _______ are pets.

So, some _______ are _______.

All _______ are _______.

No mammals are _______.

So, no _______ are _______.

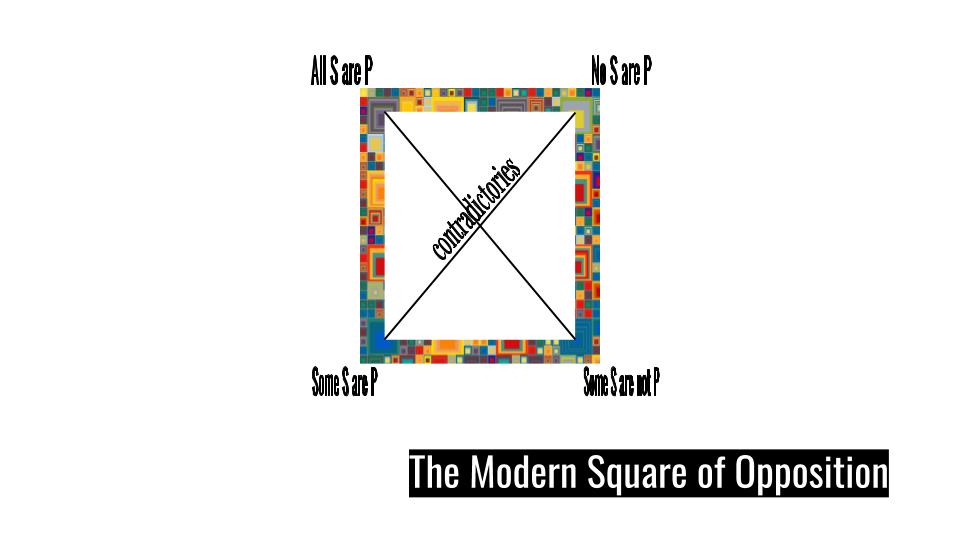

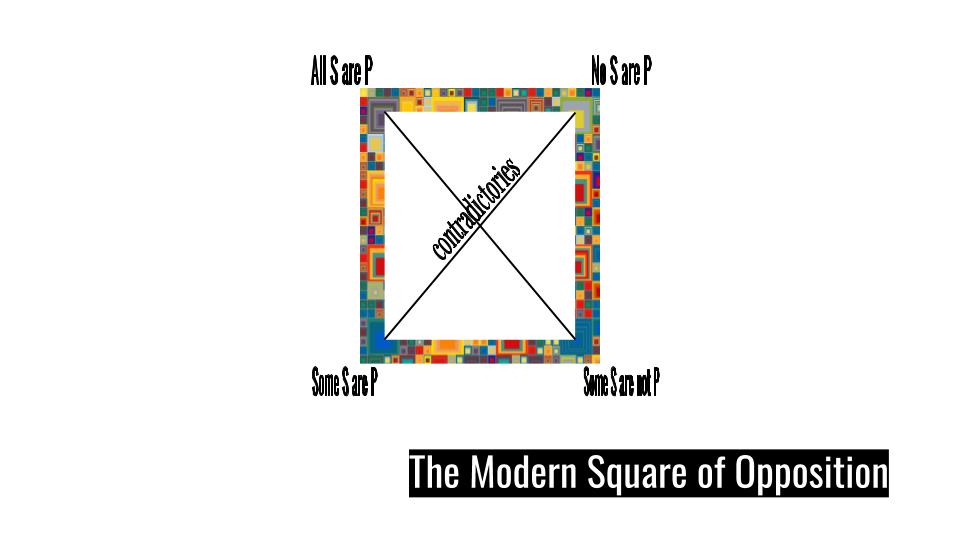

Lastly, memorize the following terms, as well as the Square of Opposition diagram below:

Two statements are contradictories if and only if they cannot both be true simultaneously and they also cannot both be false simultaneously.

Two statements are contraries if and only if they cannot both be true simultaneously, but it is logically possible both are false.

Two statements are subcontraries if and only if they cannot both be false simultaneously but it is logically possible that both are true.

One statement P is a subaltern of another statement Q if and only if P must be true if Q is true.

One statement P is a superaltern of another statement Q if and only if P must be false if Q is false.
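These definitions can be checked against the four sentence types by listing every possible situation for two classes S and P. The Python sketch below assumes that S has at least one member. The helper names are mine and are for illustration only.

```python
# Each model records two facts about the classes S and P:
#   sp  = "at least one S is P",  snp = "at least one S is not P".
# Assumption: S has at least one member, so the model where both
# facts are False is left out.
models = [(sp, snp) for sp in (True, False) for snp in (True, False) if sp or snp]

def truth_values(sp, snp):
    return {"A": not snp, "E": not sp, "I": sp, "O": snp}

rows = [truth_values(sp, snp) for sp, snp in models]

def contradictories(x, y):
    return all(r[x] != r[y] for r in rows)

def contraries(x, y):
    never_both_true = not any(r[x] and r[y] for r in rows)
    can_both_be_false = any(not r[x] and not r[y] for r in rows)
    return never_both_true and can_both_be_false

def subcontraries(x, y):
    never_both_false = not any(not r[x] and not r[y] for r in rows)
    can_both_be_true = any(r[x] and r[y] for r in rows)
    return never_both_false and can_both_be_true

def subaltern(p, q):
    # P is a subaltern of Q: P must be true if Q is true
    return all(r[p] for r in rows if r[q])

print(contradictories("A", "O"))  # True
print(contraries("A", "E"))       # True
print(subcontraries("I", "O"))    # True
print(subaltern("I", "A"))        # True
print(subaltern("A", "I"))        # False
```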

The Square of Opposition

No, no, you're not thinking; you're just being logical.

~Niels Bohr

Level I: Immediate Inferences

So far we've only assessed arguments using the Imagination Method, basically thinking through premises and intuiting if the conclusion necessarily follows. Such informal methods are helpful with simple arguments, but their usefulness would vanish in more complicated cases. More formal, rigorous methods had to be devised. We'll begin, as promised, with short one-premise arguments.1

For reference, here are the steps for using the square of opposition.

- Write out the argument.

- Assess which type of sentence of categorical logic the statement is and write the letter beside the premise.

- Write the truth-value (either T or F) next to the sentence letter of both the premise and the conclusion. (Note: If the sentence is just asserted, that means it’s true.)

- Use the different logical relations in the square of opposition—such as superalternation, subalternation, etc.—to discover what the truth-value of the premise implies. In other words, use the square of opposition to figure out the truth-value of the rest of the sentences given the premise. But remember(!), use ONLY the premise.

- Check that the truth-value of the conclusion matches the truth-value for the relevant sentence-type on your square of opposition. If they do match, the argument is valid; if not, it is invalid.
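The steps above can be sketched as a small "paper computer" in Python. This is one possible encoding, not the only one. The names `SITUATIONS`, `square`, and `is_valid` are my own, and `None` stands for a truth-value the square leaves undetermined. The sketch assumes the traditional square, where the subject class has at least one member.

```python
# The three possible situations for a non-empty class S,
# written as the truth values of the A, E, I, and O sentences about S and P.
SITUATIONS = [
    {"A": True,  "E": False, "I": True,  "O": False},  # every S is P
    {"A": False, "E": True,  "I": False, "O": True},   # no S is P
    {"A": False, "E": False, "I": True,  "O": True},   # some are, some are not
]

def square(letter, value):
    """Given one sentence type and its truth value, fill in the rest of the square."""
    possible = [s for s in SITUATIONS if s[letter] == value]
    result = {}
    for other in "AEIO":
        seen = {s[other] for s in possible}
        result[other] = seen.pop() if len(seen) == 1 else None  # None = undetermined
    return result

def is_valid(premise, conclusion):
    """premise and conclusion are (letter, truth value) pairs about the same S and P."""
    p_letter, p_value = premise
    c_letter, c_value = conclusion
    return square(p_letter, p_value)[c_letter] == c_value

# "All S are P. So, some S are P."
print(is_valid(("A", True), ("I", True)))   # True
# "It is false that all S are P. So, no S are P."
print(is_valid(("A", False), ("E", True)))  # False
```

The second argument is invalid because a false A-type sentence leaves the E-type sentence undetermined.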

FYI

Assessing Categorical Arguments for Validity: Immediate Inferences Edition

Directions: Use the Traditional Square of Opposition to check if the following one-premise arguments are valid or invalid.

Note: Some of these statements may first have to be put into standard form.

- Some cookie-eaters are cookie monsters. So, it is false that no cookie-eaters are cookie monsters.

- It is false that some cookies are turnips. So, no cookies are turnips.

- It is false that some cookies are not delicious. So, it is false that no cookies are delicious.

- It is false that all cookies are metallic. So, it is true that some cookies are metallic.

- Some cookies are not splurps. So, no cookies are splurps.

- Some cookie monsters are cookie lovers. So, all cookie monsters are cookie lovers.

- It is false that no dogs are cookie eaters; hence, it is false that all dogs are cookie eaters.

Tablet Time!

Storytime!

A caricature of Immanuel Kant.

In the late 18th century, the German philosopher Immanuel Kant wrote that logic “has not advanced a single step, and is to all appearances a closed and completed body of doctrine... There are but few sciences that can come into a permanent state, which admits of no further alteration. To these belong logic and metaphysics” (Kant as quoted in Haack 1996: 27). We can say definitively that Kant was wrong. He was about as wrong as you could be on this topic. Not only were there improvements to the logic of his time, various other "logics" sprouted and whole new disciplines arose which had at their foundation a form of logical analysis.

Although I'm not always terribly forgiving of Kant's views, in this case, I might be more lenient. From Kant's vantage point, it really might've looked as if logic was "closed and complete", at least in the West. In Susanne Bobzien's Stanford Encyclopedia of Philosophy Entry on Ancient Logic, she writes that Aristotle's logic "was taught by and large without rival from the 4th to the 19th centuries CE" (see the section on Aristotle for quote). This is surprising because, in Aristotle's time, there existed rival forms of logical analysis. We will meet the main challenger in the next unit. A question naturally arises. How did Aristotle come to dominate?

The more one studies history, the more one realizes that ideas don't always win out because they are better. Sometimes they get a little help. And other times it's just luck. In the case of Aristotle and his logic, it's a little bit of both. The story is complicated, but here's what we can say. Nearly all of the writings of the Stoics, the founders of the school of logic that was the main competitor to that of Aristotle, are lost. How they came to be lost is why this story is so complicated. I can't tell the full story here, but at least part of it is due to the rise of Christianity and the cleansing of pagan and heterodox literature. The interested student can read Freeman's (2007) The Closing of the Western Mind: The rise of faith and the fall of reason and Nixey's (2018) The Darkening Age: The Christian Destruction of the Classical World.2

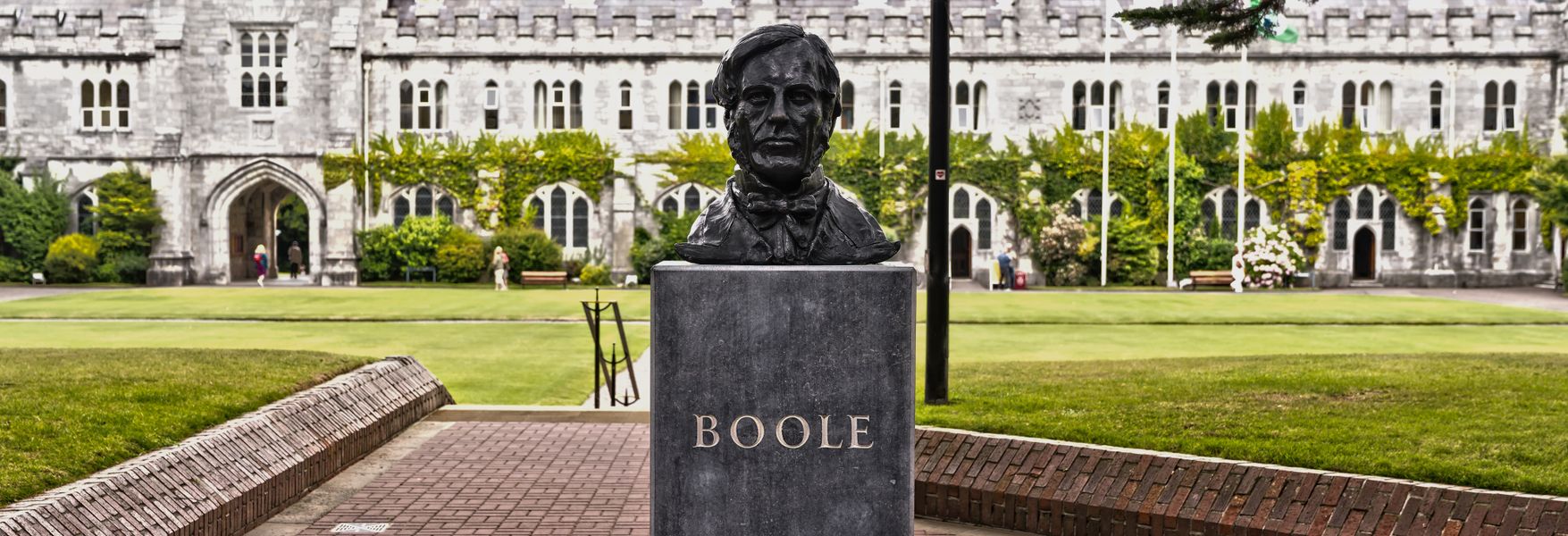

George Boole (1815-1864).

In any case, Aristotle did dominate, basically unquestioned. That is, until George Boole (1815-1864) published The Mathematical Analysis of Logic in 1847. Boole was a mathematician who spent his brief career at Queen's College, Cork in Ireland, where he has a monument (pictured above). To try to explain Boole's contributions to the modern world this early in the course would be futile. We will revisit his ideas later on in more detail. For now, we will say only two things. First, most historians of computer science agree that Boole laid down the foundations for the information age. Second, and more related to our current task, Boole made the first improvement to Aristotle's logic in over a millennium.

Hypothetically...

Consider the following proposition: “All dragons are things that are magical.”

Question: Is this proposition true or false?

You don't really want to say that it's true, do you? Wouldn't that imply that you think dragons are real? But you don't want to say it's false either. You want to say both that dragons are imaginary creatures and that, in the traditional conception of these imaginary creatures, magic is involved. There! We said it. Easy enough. But how would we represent this in categorical logic?

A young Roberto Bolaño.

Imagine someone asserts the sentence "All of Roberto Bolaño's books are at least 200 pages" (see image above). Put into standard form, "All books written by Roberto Bolaño are books with at least 200 pages" is an A-type sentence. As we learned in the previous section, a true A-type sentence implies a true I-type sentence. That is, if "All books written by Roberto Bolaño are books with at least 200 pages" is true, then "Some books written by Roberto Bolaño are books with at least 200 pages" is also true. That's basic subalternation.

This is where the trouble begins. Imagine someone asserts the sentence “All dragons are things that are magical” or "All harks are things that are very scary". Harks are indeed scary, by the way (click here). However, to say "All harks are things that are very scary" is to imply "Some harks are things that are very scary"; and remember: to logicians "some" means "at least one". So, if you say "All harks are things that are very scary" is true, you necessarily are committing to the existence of harks. Harks, though, obviously don't exist. But when we say "All harks are things that are very scary", it's pretty clear what we're trying to get at. If harks existed, then they would be very scary indeed.

So what George Boole did was stipulate that you can take either the existential viewpoint or the hypothetical viewpoint. If you are talking about things that don't exist, you simply take the hypothetical viewpoint: If F's exist, then they are G. Although this seems like a simple enough fix, there is an unexpected consequence. The Square of Opposition is gutted. Many of its connections are lost. And so we are left with the traditional Square of Opposition if we are taking the existential viewpoint, and the modern Square of Opposition when we are taking the hypothetical viewpoint (pictured below).
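To see why subalternation is lost, it can help to model the two viewpoints with Python sets. This is a rough sketch under my own assumptions: the function names are invented, and the members of `magical_things` are made up for illustration.

```python
def all_are_hypothetical(s, p):
    # "If anything is an S, then it is a P": true when S is empty
    return all(x in p for x in s)

def all_are_existential(s, p):
    # Same claim, plus the assumption that at least one S exists
    return len(s) > 0 and all(x in p for x in s)

def some_are(s, p):
    # "some" means "at least one"
    return any(x in p for x in s)

dragons = set()                      # nothing is a dragon
magical_things = {"wand", "potion"}  # made-up members, for illustration only

print(all_are_hypothetical(dragons, magical_things))  # True
print(all_are_existential(dragons, magical_things))   # False
print(some_are(dragons, magical_things))              # False
```

From the hypothetical viewpoint the A-type sentence about dragons comes out true while the matching I-type sentence is false, so the step from "all" to "some" no longer goes through.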

Important Concepts

Next time...

Be sure to complete the homework for this lesson diligently. Catch-up on any lessons you haven't covered. Review if necessary. Drink lots of coffee. Do whatever you need to do to get ready for the toughest part of this unit...

FYI

Homework!

Footnotes

1. On a technical/historical note, Aristotle himself didn't use the image of the Square of Opposition that we are using. Rather, this image was developed during the Roman imperial age using Aristotle's observations. But almost all of the relations were already present in Aristotle's work. In De Interpretatione, Aristotle discusses contraries and contradictories; in Topics, he discusses subalternation. Subcontraries were, however, an idea provided by later logicians. The Square of Opposition first appears in a commentary on Aristotle's De Interpretatione by Apuleius of Madauros, written in the second century CE (Shenefelt and White 2013: 54n8).

2. I might add that this state of affairs was confined mostly to the West. As it turns out, other regions of the world were seeing a continued interest in mathematics, logic, philosophy, and the sciences, while Europe went into its Dark Age. For example, the Islamic empire grew so rapidly that they urgently needed administrators talented in mathematical and logical analysis to help hold the domains together. The Islamic rulers gave energetic support to these studies. "Books were hunted far and wide, and gifted translators like Thabit ibn Qurra (836-901) were found and brought to work in Baghdad... So diligent were the Arabs in those enlightened times that we often know of Greek works only because an Arabic translation survives" (Gray 2003: 41).

HOMEWORK for Unit I, Lesson 4: The Square of Opposition

Note: Click here for a PDF of this assignment.

Assessing Categorical Arguments for Validity: Immediate Inferences Edition

Directions: Use the Traditional Square of Opposition to check if the following one-premise arguments are valid or invalid.

Some cookie-eaters are cookie monsters. So, it is false that no cookie-eaters are cookie monsters.

It is false that some cookies are turnips. So, no cookies are turnips.

It is false that some cookies are not delicious. So, it is false that no cookies are delicious.

It is false that all cookies are metallic. So, it is true that some cookies are metallic.

Some cookies are not splurps. So, no cookies are splurps.

Some cookie monsters are cookie lovers. So, all cookie monsters are cookie lovers.

It is false that no dogs are cookie eaters; hence, it is false that all dogs are cookie eaters.

Categorical Logic 2.0

We endeavour to employ only symmetrical figures, such as should not only be an aid to reasoning, through the sense of sight, but should also be to some extent elegant in themselves.

~John Venn

From Athens to Great Britain

As discussed in the last lesson, Aristotle's logic was taught in the West basically without opposition (and without any major updates) until the 19th century. Insofar as logic is a method for assessing arguments, categorical logic didn't need much improvement. In fact, some of the updates made to it in the 19th century, as evidenced in the epigraph above, were driven by a desire to make inferences not only valid, but also aesthetically pleasing. That being said, there were some substantive changes on the horizon.1

The updates made to logic were from George Boole, whom we met last time, and John Venn, both of whom were British logicians and mathematicians. We'll be looking at these updates in the lessons to come. Today, however, we will move on to mediate inferences, or two-premise arguments of categorical logic. More commonly, these are referred to as categorical syllogisms, as you'll learn in the Important Concepts below. We will begin by standardizing these and then use ancient techniques in assessing them for validity. These methods require us to discover the mood and figure of the syllogisms in question to see if they are valid or not, a method pioneered by Aristotle himself. Then we will move on to the more modern Venn diagrams.

Important Concepts

Mood and Figure

Here are the steps for assessing for validity using mood and figure:

- Put each individual categorical proposition into standard form: quantifier, subject class, copula, and predicate class.

- Make sure the syllogism as a whole is in standard form. That is,

- the syllogism is composed of three standard-form categorical statements;

- the subject term of the conclusion (the minor term) must occur once and only once in the second premise, and not in the first premise;

- the predicate term of the conclusion (the major term) must occur once and only once in the first premise, and not in the second premise;

- the other term in the first premise (the middle term) must also occur in the second premise.

- Label the argument according to its mood and figure (see Important Concepts above).

- If an argument's mood and figure is on this table, then the argument is valid. If not, then the argument is invalid.
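Here is a rough Python sketch of the labeling and lookup steps. Two things in it are assumptions on my part. First, `VALID_FORMS` is the usual list of fifteen forms that are valid from the hypothetical viewpoint; the table used in this course may also include forms that are valid only from the existential viewpoint. Second, each statement is written as a made-up triple of sentence letter, subject term, and predicate term.

```python
# Assumption: the fifteen forms usually listed as valid from the
# hypothetical viewpoint, grouped by figure.
VALID_FORMS = {
    1: {"AAA", "EAE", "AII", "EIO"},
    2: {"EAE", "AEE", "EIO", "AOO"},
    3: {"IAI", "AII", "OAO", "EIO"},
    4: {"AEE", "IAI", "EIO"},
}

def mood_and_figure(major_premise, minor_premise, conclusion):
    """Each statement is a (letter, subject term, predicate term) triple.

    The syllogism is assumed to be in standard form already:
    the premise containing the major term comes first.
    """
    mood = major_premise[0] + minor_premise[0] + conclusion[0]
    major_term = conclusion[2]
    middle = ({major_premise[1], major_premise[2]} - {major_term}).pop()
    middle_is_subject_of_major = major_premise[1] == middle
    middle_is_subject_of_minor = minor_premise[1] == middle
    figure = {
        (True, False): 1,   # M-P, S-M
        (False, False): 2,  # P-M, S-M
        (True, True): 3,    # M-P, M-S
        (False, True): 4,   # P-M, M-S
    }[(middle_is_subject_of_major, middle_is_subject_of_minor)]
    return mood, figure

def is_valid(major_premise, minor_premise, conclusion):
    mood, figure = mood_and_figure(major_premise, minor_premise, conclusion)
    return mood in VALID_FORMS[figure]

# The goat syllogism, reordered so the premise with the major term comes first.
goats = (("A", "mammals", "animals"),
         ("A", "goats", "mammals"),
         ("A", "goats", "animals"))
print(mood_and_figure(*goats))  # ('AAA', 1)
print(is_valid(*goats))         # True
```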

Food for thought...

Circles, circles, circles...2

“Another revolutionary development in nineteenth-century logic was the discovery, by the English logician and philosopher John Venn (1834-1923), of a radically new way to show that a categorical syllogism is valid. Venn’s method allows us to visually represent the information content of categorical sentences, in such a way that we can actually see the relations between the sentences of a syllogism” (Herrick 2013: 176; emphasis added).3

Here are the steps for Venn diagrams that we'll be following:

- Abbreviate. Abbreviate the argument, replacing each category with a single capital letter.

- Draw and Label. Label the circles (with capital letters) using the minor term (the subject term of the conclusion) for the lower left circle, the major term (the predicate term of the conclusion) for the lower right circle, and the middle term (the remaining class) on the middle circle.

- Decide on Order. If the argument contains a universal premise, enter its information first. If the argument contains two universal premises or two particular premises, order doesn’t matter.

- Enter Premise Information. Universal statements get shaded. Particular statements get an X.

- Note: When placing an X in an area, if one part of the area has been shaded, place the X in an unshaded part. When placing an X in an area, if a circle’s line runs through the area, place the X directly on the line separating the area into two regions. In other words, the X must “straddle” the line, hanging over both sides equally. An X straddling a line means that, for all we know, the individual represented by the X might be on either side of the line, or on both sides; in other words, it is not known which side of the line the X is actually on.

- Remember to decide if you are taking the hypothetical viewpoint. Look at the two circles standing for the subject terms of your premises. If these terms refer to existing things, and if there is only one region unshaded in either or both circles, place an X in that unshaded region (thereby presenting the existential viewpoint). If these terms refer to things that do not exist or the arguer does not wish to assume exist, then you are finished (thereby presenting the hypothetical viewpoint). Repeat for the middle and predicate terms.

- Assess for validity. A categorical syllogism is valid if, by diagramming only the premises, we have also diagrammed the information found in the conclusion. A categorical syllogism is invalid if, when we have diagrammed the information content of the premises, information must be added to the diagram to represent the information content of the conclusion.

- CAUTION: If no X or shading appears in an area, this does not say that nothing exists in the area; rather, it indicates that nothing is known about the area. Again, it only means we have no information about the area. Thus, for all we know, the area might be empty, or it might contain one or more things.

- Label the argument valid or invalid.

- Look over your work.
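For those who like to see a procedure spelled out, the diagramming steps can be imitated in Python. This is a simplified sketch under stated assumptions, not a replacement for drawing the circles. It takes the hypothetical viewpoint only, so the extra X for existing things is never added. The letters S, P, and M stand for the minor, major, and middle terms, and all function names are my own.

```python
from itertools import product

# The three circles cut the diagram into 8 regions. A region is a triple
# (in_S, in_P, in_M) saying which circles it lies inside.
REGIONS = set(product([True, False], repeat=3))
INDEX = {"S": 0, "P": 1, "M": 2}

def area(inside, outside=None):
    """All regions inside circle `inside` and, if given, outside circle `outside`."""
    return {r for r in REGIONS
            if r[INDEX[inside]] and (outside is None or not r[INDEX[outside]])}

def target(letter, subj, pred):
    """The area a statement talks about: shaded for A/E, marked with an X for I/O."""
    if letter in "AO":                       # subject circle outside predicate circle
        return area(subj, outside=pred)
    return area(subj) & area(pred)           # E and I: the overlap

def diagram(premises):
    shaded, xs = set(), []
    # Universal premises are entered first, as in the "Decide on Order" step.
    for letter, subj, pred in sorted(premises, key=lambda p: p[0] not in "AE"):
        if letter in "AE":
            shaded |= target(letter, subj, pred)
        else:
            # The X avoids shaded parts; two regions left means it straddles a line.
            xs.append(target(letter, subj, pred) - shaded)
    return shaded, xs

def is_valid(premises, conclusion):
    shaded, xs = diagram(premises)
    letter, subj, pred = conclusion
    wanted = target(letter, subj, pred)
    if letter in "AE":
        return wanted <= shaded                # already shaded by the premises
    return any(x and x <= wanted for x in xs)  # some X lies wholly in the area

# All M are P. Some S are M. So, some S are P.
print(is_valid([("A", "M", "P"), ("I", "S", "M")], ("I", "S", "P")))  # True
# Some M are P. All S are M. So, some S are P.
print(is_valid([("I", "M", "P"), ("A", "S", "M")], ("I", "S", "P")))  # False
```

In the second argument the X straddles the line of the S circle, so the diagram does not tell us that anything is both S and P.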

FYI

Assessing Categorical Arguments for Validity: Venn Diagram Edition

Directions: Use either the mood and figure method or the Venn diagram Method to determine whether the following categorical syllogisms are valid. (Here's the table for mood and figure.)

Note that some arguments may be out of order, placing the conclusion before the premises. Use conclusion indicators, like "therefore" and "so", to help you identify the conclusion. Premise indicators, like "since" will help you identify premises.

- All philosophers are lovers of truth. No lovers of truth are close-minded people. So, no philosophers are close-minded people.

- No dogs are cats, and no fish are dogs. So, some cats are fish.

- All dogs can sing, and no singers can swim. Therefore, no dogs can swim.

- All anteaters eat hymenoptera. Therefore some Myrmecophagidae are anteaters, since some eaters of hymenoptera are Myrmecophagidae.

- Some scavengers are fish, but some dogs aren’t fish. Therefore, some dogs aren’t scavengers.

- All goats are cute. All small mammals are cute. So, all small mammals are goats.

- Some actors are sculptors. Some poets are not actors. So, some poets are not sculptors.

- All A are B. All A are C. Thus, all C are B.

- Some A are not C, and all C are B. Thus, some A are not B.

- Some B are C. Some B are not A. Therefore, some A are C.

Tablet Time!

Footnotes

1. I might add that other thinkers of the time were also driven by an appeal to beauty as opposed to experimental data. Physicist James Clerk Maxwell (1831-1879), for example, famous for being the first to hypothesize that a changing electric field creates a magnetic field, arrived at this view not through experimental data, but because he was bothered that nature would otherwise be asymmetrical. In other words, for Maxwell, it was a matter of aesthetics that drove him to his conclusion (see Wolfson 2000, Lecture 4).

2. The techniques used here are essentially those of Herrick (2013), chapter 9 in particular. I also, however, am using tips and verbiage found in Monge (2017).

3. I might add that John Venn truly stood on the shoulders of giants with regard to his discovery of another method of assessing for validity. As it turns out, none other than Swiss mathematician Leonhard Euler had offered a series of diagrams also composed of circles to express the import behind the four sentences of categorical logic. This work was done in the 18th century and was improved upon by the 19th century French mathematician J. D. Gergonne (see Shenefelt and White 2013: 60-64). This, of course, takes nothing away from John Venn's aesthetically pleasing work, which is the culmination of these analyses.

HOMEWORK FOR UNIT I, LESSON 5: Categorical Logic 2.0

Assessing Categorical Arguments for Validity: Venn Diagram Edition

Directions: Use either the mood and figure method or the Venn diagram Method to determine whether the following categorical syllogisms are valid. (Here's the table for mood and figure.)

Note: Some arguments may be out of order, placing the conclusion before the premises. Use conclusion indicators, like "therefore" and "so", to help you identify the conclusion. Premise indicators, like "since" will help you identify premises.

All philosophers are lovers of truth. No lovers of truth are close-minded people. So, no philosophers are close-minded people.

No dogs are cats, and no fish are dogs. So, some cats are fish.

All dogs can sing, and no singers can swim. Therefore, no dogs can swim.

All anteaters eat hymenoptera. Therefore some Myrmecophagidae are anteaters, since some eaters of hymenoptera are Myrmecophagidae.

Some scavengers are fish, but some dogs aren’t fish. Therefore, some dogs aren’t scavengers.

All goats are cute. All small mammals are cute. So, all small mammals are goats.

Some actors are sculptors. Some poets are not actors. So, some poets are not sculptors.

All A are B. All A are C. Thus, all C are B.

Some A are not C, and all C are B. Thus, some A are not B.

Some B are C. Some B are not A. Therefore, some A are C.

Logic and Computing

Persistence is often more important than intelligence. Approaching material with a goal of learning it on your own gives you a unique path to mastery.

~Barbara Oakley

Tips on Learning

In her 2014 A Mind for Numbers, Barbara Oakley discusses the strategies that she and other accomplished instructors teach their students to prepare for STEM classes. These skills, however, could be applied to any challenging field. In fact, even chess players use these. If taken to heart, these techniques can help you succeed in acquiring new skills in challenging disciplines, including logic. As such, Oakley's work is invaluable for students who are starting their academic careers. Let's briefly review some of her recommendations.

In chapter 6, Oakley discusses the phenomenon of “choking”, the feeling of being overwhelmed during a test, making it so that none of the information that you need will come to mind. Many students report this happening to them when they have failed a test. Choking occurs when we have overloaded our working memory. To prevent this, we must take enough time to “chunk”, or integrate one or more concepts into a smoothly connected working thought pattern. In practice, you should give yourself several days to learn tough concepts and use retrieval practice during your study. That is to say, read slowly and, after each paragraph (or less), pause to say out loud what the main idea of what you just read is. You can also do this with homework problems. Don't just rush to finish them. Explain to yourself the reasoning behind your solution.

In chapter 11, Oakley discusses the role of working memory. To excel in your studies (and life), use memory tricks to become proficient at remembering important information. We’re good at remembering physical environments, like our homes. Whenever possible, “link” what you’re learning to physical locations and things. If you're learning physiology, perhaps the layers of the skin could be the layers of your house. If you're learning logic rules, you can make analogies to everyday household items, like we will later with the analogy of the lock and key. You can also carry pictures of flash cards of these connections with you when you work out, since working out regularly has been shown to improve memory. Take a glance before starting your training to prime your brain to think about those things in the background of your mind. It works!

Tips also found in chapter 11 include utilizing our predilection for music as an aid in our studies. Little songs that you make up might help you remember some important concepts or equations. The sillier the better. Oakley reminds us to not worry about being weird; some of the most brilliant thinkers were not very normal people. Also, sometimes a location can evoke a certain feeling. You can invoke certain memories by evoking this feeling. If you studied a lot in the library, visualize yourself in the library before starting a test.

Other tips:

- Make your own questions.

- Apply the concepts you’re learning to your life.

- Doodle visual metaphors for hard concepts.

- Review just before sleep and upon waking.

Level III: Sorites

For reference, here are the two main steps for working out sorites. First, make sure the argument is in standard form. This means that you must make sure that:

- All statements are standard-form categorical statements.

- Each term occurs twice.

- The predicate term of the conclusion appears in the first premise.

- Every statement up to the conclusion has a term in common with the statement immediately following.

Once this has been done, follow these steps:

- Pair together two premises that have a term in common and derive an intermediate conclusion. This conclusion should have a term in common with one of the unused statements in the sorites.

- Pair together these two statements and draw a conclusion from this second pair.

- Repeat until all premises have been used.

- Evaluate each individual syllogism.

Rule: If each individual syllogism is valid, the sorites is valid. If even one syllogism in the chain is invalid, the sorites is invalid.
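To make the standard-form checklist above a little more concrete, here is a minimal Python sketch. It rests on some loud assumptions: each statement is boiled down to a (subject term, predicate term) pair, the conclusion is listed last, and quantity and quality ("All", "No", "Some") are ignored entirely. The function name `is_standard_form_sorites` and the letter terms are made up for illustration. The sketch only checks the last three standard-form conditions; it does not evaluate whether the sorites is valid.

```python
from collections import Counter

def is_standard_form_sorites(statements):
    """statements: list of (subject, predicate) pairs, premises first, conclusion last."""
    *premises, conclusion = statements

    # Each term occurs exactly twice across the whole argument.
    counts = Counter(term for pair in statements for term in pair)
    each_term_twice = all(n == 2 for n in counts.values())

    # The predicate term of the conclusion appears in the first premise.
    predicate_in_first = conclusion[1] in premises[0]

    # Every statement shares a term with the statement immediately following it.
    chained = all(set(a) & set(b) for a, b in zip(statements, statements[1:]))

    return each_term_twice and predicate_in_first and chained

# All B are A. All C are B. All D are C. Therefore, all D are A.
ordered = [("B", "A"), ("C", "B"), ("D", "C"), ("D", "A")]
# Same statements, but the premises are out of order.
shuffled = [("D", "C"), ("B", "A"), ("C", "B"), ("D", "A")]

print(is_standard_form_sorites(ordered))
print(is_standard_form_sorites(shuffled))
```

The shuffled version fails because the predicate term of the conclusion no longer appears in the first premise, so it would need to be reordered before you start pairing premises.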

For more information, check out the video below.

Storytime!

Computation today...

I'd like to add a caveat to this happy history of logic and computation. As if we need a reminder, the history of civilization isn't always in the "up" direction. Sometimes civilization takes a few steps back; sometimes it's several steps. Our experiments with computational devices are only just beginning, and there is no guarantee that there will be a happy ending. For example, in a recent study, researchers presented machine learning experts with 70 occupations and asked them about the likelihood that they will be automated. The researchers then used a probability model to project how susceptible to automation an additional 632 jobs were. The result: it is technically feasible to do about 47% of jobs with machines (Frey & Osborne 2013).

The jobs at risk of robotization are both low-skill and high-skill jobs. On the low-skill end, it is possible to fully automate most of the tasks performed by truckers, cashiers, and line cooks. It is conceivable that the automation of other low-skill jobs, like those of security guards and parcel delivery workers, could be accelerated due to the global COVID-19 pandemic.

High-skill workers won't be getting off the hook either, though. Many of the tasks performed by a paralegal could be automated. The same could be said for accountants.

Are these jobs doomed? Hold on a second... The researchers behind another study disputed Frey and Osborne’s methodology, arguing that it’s not the entire job that will be automated but separate tasks within the job role more generally. After reassessing, they found that only about 38% of jobs could be done by machines...

It gets worse...1

The Road Ahead

In the Storytime! above, we introduced some historical figures that will play a role in the development of logic that we will cover in this course, namely Alan Turing. Historically speaking, though, the most important group to discuss at this juncture is the Stoic school of Philosophy. It's true. In Aristotle's own time, there were criticisms of his approach to reasoning coming from a group of people who would gather at a stoa (a covered colonnade, or porch) in the agora (marketplace). Stay tuned.

A hark, which is definitely not real.

For now, we can admit that Aristotle's logic has some weaknesses. First off, once Boole introduced the concept of the hypothetical viewpoint, an unappealing relativity emerged: some arguments are valid or invalid depending on whether you're taking the existential viewpoint or the hypothetical viewpoint. Not only is there something inelegant about this, it also makes it so that Aristotle's highly abstracted categorical statements (like "All S are P") are somehow "missing" information. How would we know if "All S are P" refers to actually existing things or not? There must be a way of expressing which viewpoint we are taking within our symbolized statements, but Aristotle's logic does not have a convention for this.

Second, Aristotle believed that all propositions could be forced into standard form for categorical statements. But as you might've noticed, this is really counterintuitive in some cases. For example, consider the sentence “All except truckers are happy with the new regulations”. To accurately convey the information in this expression, one must use two standard form categorical statements:

- All non-truckers are persons who are happy with the new regulations.

- No truckers are persons who are happy with the new regulations.

It goes without saying that this is extremely counterintuitive, inelegant, and cumbersome.

And so we take the next step in our introduction to the history of logic. We now take a look at the chief rival to Aristotle’s Categorical Logic: truth-functional logic.

FYI

Related Material—

Video: TEDTalks, The wonderful and terrifying implications of computers that can learn | Jeremy Howard

Reading: Nick Bostrom, SuperIntelligence (Answer to the 2009 EDGE QUESTION: “WHAT WILL CHANGE EVERYTHING?”)

Footnotes

1. The interested student can refer to the literature on the potential of artificial intelligence becoming an existential risk to humans. The most famous example of this is the work of Nick Bostrom, particularly his Superintelligence: Paths, Dangers, Strategies.

TL (Pt. I)

Wise people are in want of nothing, and yet need many things. On the other hand, nothing is needed by fools, for they do not understand how to use anything, but are in want of everything.

~Chrysippus

Chrysippus: the second greatest logician of antiquity

The Stoic School of Philosophy, generally speaking, was founded by Zeno of Citium in Athens in the early 3rd century BCE. The Stoics are mostly known for their ethical views, as you will learn in the Storytime! below. It was Chrysippus of Soli (279-206 BCE), who took charge of the Stoic school around 230 BCE, that initiated its formal studies into logic. In typical Greek intellectual fashion, Chrysippus didn't follow Aristotle's approach blindly; Chrysippus wanted to move past the writings of "the master" (as Aristotle was sometimes called). And so Chrysippus analyzed the notion of truth. Before diving into Chrysippus' insights, however, let's get some context.

So far, the emphasis of our logical analysis has been on categories. But the Stoic school of logic conceived of reasoning in a wholly different way. They broke down reasoning not to the level of categories, but to the level of complete declarative sentences. Chrysippus realized that some sentences had a special property: they could either be true or false. Call sentences that have this property truth-functional sentences. It's important to note that not all sentences exhibit this property. It makes no sense, for example, to say that an exclamation is true. We noted this back in Unit I. Instead, it appears that declarative sentences, sentences that describe a state of affairs, are the only type of sentence that is truth-functional. This is why Chrysippus's logic is called truth-functional logic: it is the logical analysis of complete declarative sentences.

Truth-functional logic is also known as propositional logic. A proposition is the thought expressed by a declarative sentence. This might be tough to get a grasp on at first, so let me give you an example. Consider the following set of sentences:

- "Snow is white."

- "La neige est blanche."

- "La nieve es blanca."

- "Schnee ist weiß."

This set contains four sentences. That's clear enough. But how many propositions does it contain? In fact, it contains only one proposition. This is because the same thought is being expressed in four different languages. These sentences, moreover, are clearly different from sentences like "What’s a pizookie?", "Close the door!", and "AHH!!!!!". It simply is the case that we cannot reasonably apply the label of true or false to a question, a command, or an exclamation, the way we can to a declarative sentence.

Another way of thinking about propositions relates to the language of thought, which is sometimes (jokingly) referred to as Brainish or Mentalese. Have you ever had a thought but couldn't quite find the words to express yourself? Some linguists, like Steven Pinker, might say that you have the proposition in the language of thought, and you are searching for the natural language—say, English—to "clothe" your proposition in so that you can communicate this idea.1

One last note that might be of interest here: it was actually the Stoics that gave logic its name:

“Aristotle might be the founder of logic as an academic discipline, but it was the Stoic philosophers who gave the subject its actual name. At the research institute founded by Aristotle, the Lyceum, the subject apparently had no formal title. After the death of Aristotle, his logic texts were bundled together as a unit known only as the ‘organon’ or ‘tool of thought.’ Early in the third century BCE, logicians in the Stoic school of philosophy, also located in Athens, Greece, named the subject after the Greek term logos (which could mean ‘word’, ‘speech’, ‘reason’, or even ‘the underlying principle of the cosmos’)” (Herrick 2013: 211).

Storytime!

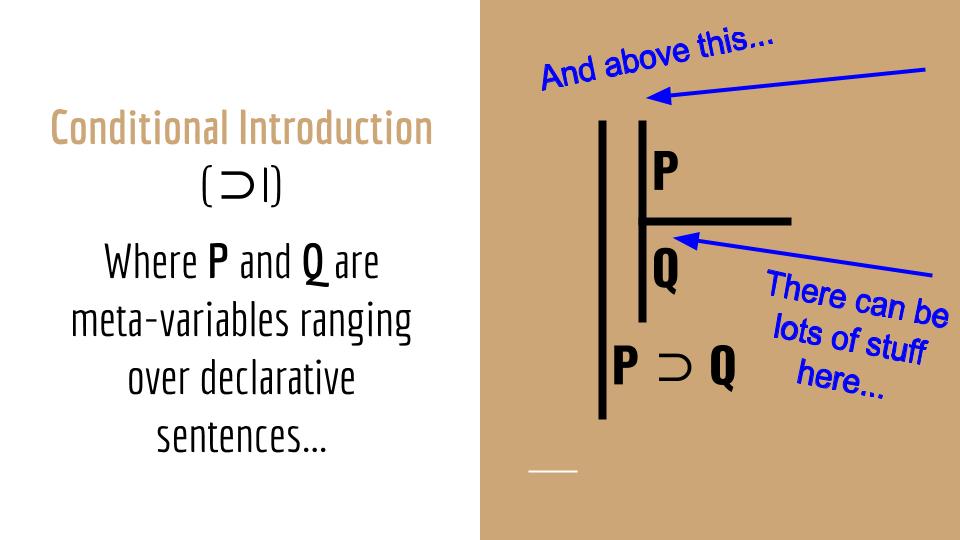

Important Concepts

The Great Insight

We've been hinting at Chrysippus' great insight, and now it's time to make it clear. Here is what Chrysippus realized. Truth-values and truth-functions reside within compound sentences with truth-functional connectives. In other words, there are logical connectives, like "and" and "or", that establish a fixed relationship between the truth-values of the component sentences and the truth-value of the compound sentence as a whole. Let's take a look at these relationships so you can see what I mean.

Let's start with negations. A negation is a sentence, either simple or compound, whose main logical operator negates the whole thing. For example, take the simple sentence "James is home". To negate this sentence would be to say something like "It's not the case that James is home". Now here's a question: Is this statement, "It's not the case that James is home", true or false? That depends. If a sentence negated is true, the negation as a whole is false; and if a sentence negated is false, the negation as a whole is true. In other words, if "James is home" is true, then the whole compound ("It's not the case that James is home") is false. But if "James is home" is false, then the compound ("It's not the case that James is home") is true.
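If it helps to see this spelled out mechanically, here is a small Python sketch of the negation truth-function. The function name `negation` is just an illustrative choice, and Python's built-in `not` is standing in for "It's not the case that".

```python
def negation(sentence_is_true: bool) -> bool:
    """Flip the truth-value: true becomes false, false becomes true."""
    return not sentence_is_true

# Try both possible truth-values for "James is home".
for james_is_home in (True, False):
    print(f"'James is home' is {james_is_home}; "
          f"'It's not the case that James is home' is {negation(james_is_home)}")
```

Notice that the function never needs to know who James is or where he lives. All it needs is the truth-value of the sentence being negated.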

Let's move on to conjunctions. A conjunction is simply an and-statement, like "James is home, and Sabrina is at the store." In a conjunction, the component sentences are called conjuncts. The relationship established here is that only when both conjuncts are true is the whole compound true. In other words, the compound ("James is home, and Sabrina is at the store") is only true if both "James is home" is true and "Sabrina is at the store" is true.
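Here is a minimal Python sketch of that relationship, listing all four combinations of truth-values for the two conjuncts. The names `conjunction` and `rows` are illustrative assumptions, and Python's `and` plays the role of the connective.

```python
from itertools import product

def conjunction(left: bool, right: bool) -> bool:
    """True only when both conjuncts are true."""
    return left and right

# Every combination of truth-values for
# "James is home" and "Sabrina is at the store".
rows = [(james, sabrina, conjunction(james, sabrina))
        for james, sabrina in product([True, False], repeat=2)]

for james, sabrina, whole in rows:
    print(f"James is home: {james!s:<5}  Sabrina is at the store: {sabrina!s:<5}  compound: {whole}")
```

Only the first row, where both conjuncts are true, makes the compound true.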

A disjunction is an or-statement, with the component sentences being referred to as disjuncts. It is important to note that the disjunction we will be covering is the inclusive "or". In other words, disjunctions, for our purposes, will be true when at least one of the disjuncts is true, including the case where they are both true. Only when both disjuncts are false will the whole compound be false.
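To see what "inclusive" amounts to, here is a short Python sketch that puts the inclusive "or" next to an exclusive "or". The exclusive version is included only for contrast and is not the disjunction used in this course; both function names are illustrative assumptions.

```python
from itertools import product

def inclusive_or(left: bool, right: bool) -> bool:
    """True when at least one disjunct is true (the 'or' used in this course)."""
    return left or right

def exclusive_or(left: bool, right: bool) -> bool:
    """True when exactly one disjunct is true (shown only for contrast)."""
    return left != right

for left, right in product([True, False], repeat=2):
    print(f"{left!s:<5} {right!s:<5}  inclusive: {inclusive_or(left, right)!s:<5}  "
          f"exclusive: {exclusive_or(left, right)}")
```

The two versions disagree in exactly one row: the case where both disjuncts are true.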

Conditionals are if/then-statements, like "If James is home, then Sabrina is at the store." The antecedent is the component sentence in the if-half of the sentence, in this case "James is home", and the consequent is the component sentence in the then-half of the sentence, "Sabrina is at the store." The truth-function of conditionals is a little counterintuitive, but here's the basic idea. When the antecedent is true and the consequent is false, the conditional as a whole is false. All other times, the conditional as a whole is true.

Sidebar

Students sometimes have trouble with this truth-function. One way to think about conditionals is as "rules". Here in the U.S.A., the legal drinking age is 21. So, generally speaking, we assume the following "rule": If someone is drinking, then they are 21 or older. How would we "falsify" or violate this rule? Well, if we saw someone drinking and learned they were 21, all is well; the rule is not being violated. If we saw someone not drinking and learned that they were 21, this wouldn't affect our rule in any way. Similarly, if we saw someone not drinking and learned that they were under 21, then the rule still stands. It's only in the case where it's true that someone is drinking and it's false that they are 21 or older that the rule is being violated. That's the basic intuition behind when a conditional is false: it's only when the antecedent is true and the consequent is false that a conditional as a whole is false.
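The drinking-age rule can be sketched in a few lines of Python. Treat this as an illustration only: the function name `conditional`, the list of observations, and the age of 19 for the under-21 cases are all assumptions made up for the example. The rule being checked is "if someone is drinking, then they are 21 or older".

```python
def conditional(antecedent: bool, consequent: bool) -> bool:
    """False only when the antecedent is true and the consequent is false."""
    return (not antecedent) or consequent

# Hypothetical observations: (description, is_drinking, age).
observations = [
    ("drinking, age 21", True, 21),
    ("not drinking, age 21", False, 21),
    ("not drinking, age 19", False, 19),
    ("drinking, age 19", True, 19),
]

for description, is_drinking, age in observations:
    rule_holds = conditional(is_drinking, age >= 21)
    print(f"{description}: rule {'holds' if rule_holds else 'is violated'}")

# Collect only the cases that violate the rule.
violations = [description for description, is_drinking, age in observations
              if not conditional(is_drinking, age >= 21)]
```

Only one of the four cases ends up in `violations`: the one where the antecedent is true and the consequent is false.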

The last truth-functional compound we will be looking at is the biconditional, or if-and-only-if-statement. This relationship is such that only when the truth-values match is the compound true. The analogy that I like to use when teaching this concept is that of a contract. Consider the sentence "You’re divorced if, and only if, you've signed the divorce papers." If one of the component sentences is true, then so is the other. Similarly, if one is false, then so is the other. So, only when the truth-values of the component sentences match is the whole compound true; if they don't match, the compound is false.
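As a rough Python sketch, the biconditional is just a check that two truth-values match. The sketch also confirms a standard fact not stated above: a biconditional behaves like two conditionals, one in each direction, joined by "and". The function names and the variables `divorced` and `signed` are illustrative assumptions.

```python
from itertools import product

def conditional(antecedent: bool, consequent: bool) -> bool:
    """False only when the antecedent is true and the consequent is false."""
    return (not antecedent) or consequent

def biconditional(left: bool, right: bool) -> bool:
    """True only when the two truth-values match."""
    return left == right

# "You're divorced if, and only if, you've signed the divorce papers."
for divorced, signed in product([True, False], repeat=2):
    both_directions = conditional(divorced, signed) and conditional(signed, divorced)
    # The biconditional agrees with the pair of conditionals in every row.
    assert biconditional(divorced, signed) == both_directions
    print(f"divorced: {divorced!s:<5}  signed: {signed!s:<5}  "
          f"compound: {biconditional(divorced, signed)}")
```

The compound comes out true in the first and last rows, where the truth-values match, and false in the two middle rows, where they don't.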

Leaving natural language behind...