The Chinese Room

Achilles:

That is a weird notion. It means that although there is frantic activity occurring in my brain at all times, I am only capable of registering that activity in one way—on the symbol level; and I am completely insensitive to lower levels. It is like being able to read a Dickens novel by direct visual perception, without ever having learned the letters of the alphabet. I can't imagine anything as weird as that really happening.

~Douglas Hofstadter¹

What is Artificial Intelligence?

This is actually a difficult question to answer. Various theorists lament the “moving goal posts” for what counts as artificial intelligence (e.g., Hofstadter 1979: 26). The basic complaint is that once a computer has mastered some particular task that appears to be sufficient for intelligence (of some sort), that task is (all of a sudden) no longer sufficient for intelligence. Hofstadter even names this tendency after Larry Tesler, apparently the first person to point out the trend to him. Tesler's Theorem states that AI is whatever hasn't been done yet (ibid., 621).

There are two things to point out here. First, the goal of AI research really has evolved over time. In the early days of AI, the goal was to create a machine capable of thinking (even if people couldn't agree on what thinking meant). Today, AI researchers (in general) define the goal of their field much more narrowly. For example, Eliezer Yudkowsky (2015) defines intelligence, very abstractly, as the capacity to attain goals in a flexible way. In practice, AI researchers typically work within a narrow domain: perhaps image recognition, natural language processing, or sentiment analysis. In other words, today's AIs are good at one task, or perhaps a handful of tasks, but they're not capable of solving problems in general the way humans are. A researcher who is trying to develop an artificial intelligence that is good at solving problems generally is said to be working on artificial general intelligence. But, according to AI pioneer Marvin Minsky, most researchers aren't working on that. Minsky, in fact, lamented the direction of the field of AI until the day he died in 2016 (see Sejnowski 2018, chapter 17).

Second, there is a real lack of coordination between different disciplines when it comes to standardizing their jargon. Take, for example, the dominant paradigm of AI research from the mid-1950s until the late 1980s: symbolic artificial intelligence. Symbolic artificial intelligence is an umbrella term for all the methods in artificial intelligence research that are based on high-level "symbolic" formal procedures. It rests on the assumption that many or all aspects of intelligence can be achieved by the manipulation of symbols, as in first-order logic; this assumption was dubbed the “physical symbol system hypothesis” (see Newell and Simon 1976). This hypothesis is a close relative, indeed a predecessor, of a view advocated by the philosopher Jerry Fodor, which he called the representational theory of mind: Turing-style computation performed on a language of thought. Notice the proliferation of labels(!): symbolic AI, the physical symbol system hypothesis, the representational theory of mind. Given this muddy conceptual landscape, it is perhaps not too surprising that no one ever settled on a solid conception of what intelligence is.

The rise and fall of the physical symbol systems hypothesis

AI pioneers at Dartmouth College.

The field of AI is said to have begun in the summer of 1956 at Dartmouth College. That summer, ten scientists interested in neural nets, automata theory, and the study of intelligence convened for six weeks and initiated the study of artificial intelligence. During this early era, AI practice typically involved refuting claims that machines could not do certain things in limited domains. For example, it was said that a machine could not construct logical proofs, and so AI practitioners built a program called the Logic Theorist, which proved most of the theorems in chapter 2 of Whitehead and Russell’s Principia Mathematica. It even produced one proof that was much more elegant than Whitehead and Russell’s own version.

During these early days, at least some AI researchers believed in the physical symbol system hypothesis, hereafter PSSH. In a nutshell, PSSH is the view that Turing-style computation over symbols is all that is needed to perform a given task. Moreover, any system that could engage in this symbol-crunching to perform some task could be said to be intelligent (see Newell and Simon 1976: 86-90). In other words, any machine that could run the code that performs some task has some kind of intelligence. In fact, some practitioners who assumed PSSH (e.g., Newell and Simon) believed that once they had programmed their machines to perform a particular task, the code for that task was the explanation of the processes behind it. In other words, if you want to understand how some task is performed, looking at the code for a machine that can perform that task is as good an explanation as you're going to find.

Clearly, though, reading the code that lets a machine perform some task (say, playing chess) does not actually help you understand the mental processes behind playing chess. It's a little like my handing you printouts of scans of your own brain and then asking you what you were thinking at that moment. Sure, your thoughts are had by your brain. But even if you could see all the different patterns of neurons being activated, you wouldn't know which precise thought those patterns correspond to. Try it yourself. Can you tell what's being thought of in the image below? My guess is "no". So the advocates of PSSH were working with an impoverished notion of "explanation".

There are various reasons why PSSH is no longer the dominant paradigm in AI research. One of them is practical: there was an upper limit to what could be accomplished with it. Everything that a machine did had to be meticulously programmed by a team of researchers. This is a bit of an oversimplification, but basically the machine was only as smart as the programmers who coded it.

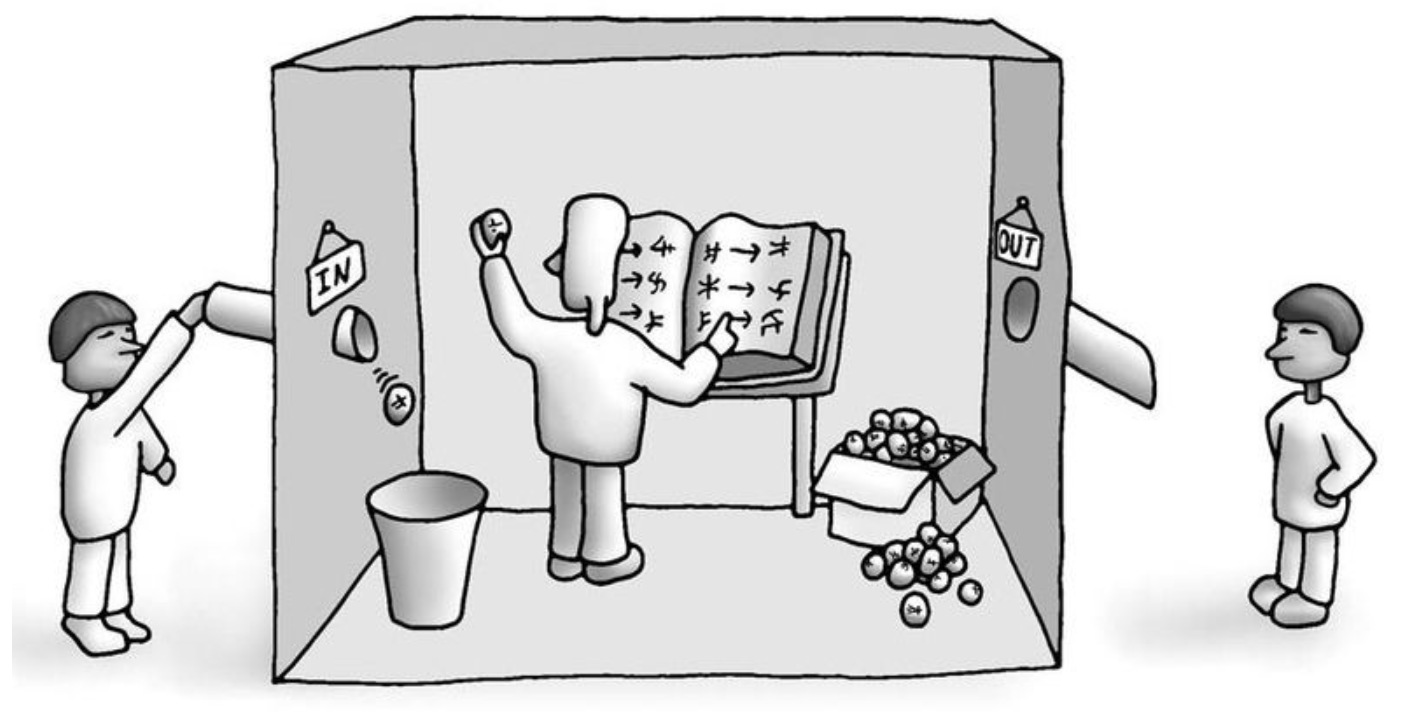

There were also philosophical/theoretical objections to PSSH. One of the most famous was John Searle's. Searle asks you to imagine yourself in a room with a large rule book, receiving messages from the outside world through a little slot in the wall. All the messages are in Mandarin Chinese (and let's pretend, for the sake of the example, that you don't know anything about Mandarin). Your task is to take each message from the slot, find the symbols you just received in the rule book, and follow the arrows to see which symbols you are supposed to respond with. Once you have figured out the correct output, you send those symbols out through the slot and wait for a new message. As long as you perform your role correctly, any outsider would think the room is a well-functioning machine that understands Chinese. But ask yourself this: would you have any idea what's going on? Would you understand a single word of the messages going in and out? Of course not.

“It seems to me obvious in the example that I do not understand a word of Chinese stories. I have inputs and outputs that are indistinguishable from those of the native Chinese speaker, and I can have any formal program you like, but I still understand nothing. Schank’s computer, for the same reasons, understands nothing of any stories, whether in Chinese, English, or whatever…” (Searle 1980: 186).
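Searle's rule book is, in effect, a lookup procedure: match the shapes that come in, emit the shapes the book dictates. The Python below is a minimal sketch of that idea, assuming a tiny hypothetical rule book (the entries, the fallback reply, and the `room` function are invented for illustration; a program that could really pass for a native speaker would need vastly more than a table). The English glosses in the comments are for the reader only; nothing in the procedure ever consults them.

```python
# A toy "rule book": a hypothetical mapping from incoming symbol strings to
# outgoing symbol strings. The English glosses in these comments are for the
# reader; the operator of the room never sees them.
RULE_BOOK: dict[str, str] = {
    "你好吗？": "我很好，谢谢。",        # "How are you?" -> "I'm fine, thanks."
    "你叫什么名字？": "我没有名字。",    # "What's your name?" -> "I don't have a name."
}

FALLBACK = "请再说一遍。"  # "Please say that again." (used for unlisted messages)


def room(message: str) -> str:
    """Return the reply the rule book dictates for an incoming message.

    This is pure symbol matching: the function compares strings and never
    touches any representation of what they mean.
    """
    return RULE_BOOK.get(message, FALLBACK)


if __name__ == "__main__":
    for slip in ["你好吗？", "今天天气怎么样？"]:  # second one is not in the book
        print(slip, "->", room(slip))
```

However sophisticated the table (or program) becomes, the operator's job stays the same kind of job: comparing shapes, which is exactly the point Searle presses.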

What does Searle conclude? Searle grants that machines can perform narrowly defined tasks (i.e., weak AI). But through his thought experiment, Searle denies that, under the physical symbol system hypothesis, strong artificial intelligence (a machine that genuinely understands and thinks) is possible. In other words, symbolic AI will never give you thinking machines.

“Whatever purely formal principles you put into the computer will not be sufficient for understanding, since a human will be able to follow the formal principles without understanding anything, and no reason has been offered to suppose that they are necessary or even contributory, since no reason has been given to suppose that when I understand English, I am operating with any formal program at all” (Searle 1980: 187).

But that was 1980...

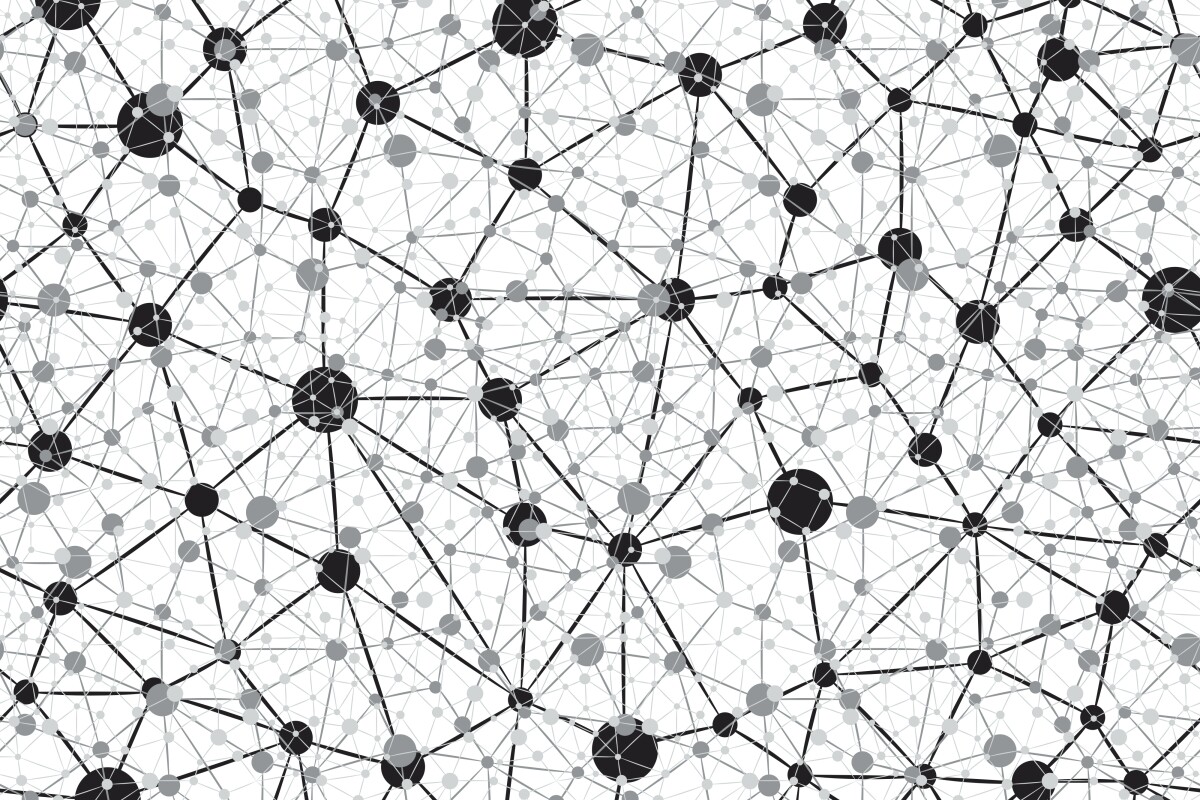

Today, we are in the age of machine learning. Machine learning (ML) is an approach to artificial intelligence in which the machine is allowed to develop its own algorithm from training data. Sejnowski (2018) claims that ML became the dominant paradigm in AI in 2000. Since 2012, the dominant method in ML has been deep learning. The most distinctive feature of deep learning (and of much of ML) is its use of neural networks, as opposed to Turing-style deterministic computation. Please enjoy this helpful video to wrap your mind around neural networks:

To be clear, deep learning has been around since the 1960s (with ML in general around since the 1950s) as a rival to the symbolic artificial intelligence paradigm. Interestingly, according to Sejnowski (2018, chapter 17), it was the work of an AI pioneer, Marvin Minsky, that steered the field of AI away from the statistically driven approach of machine learning and toward deterministic Turing-style computation. In particular, Sejnowski argues that Minsky’s (erroneous) assessment of the capabilities of the perceptron (an early machine learning algorithm) derailed machine learning research for a generation. It was not until the late 1980s that the field of AI gave ML a second chance, and it has since had radical success. ML, unlike symbolic AI, is based on subsymbolic statistical algorithms, and its neural networks were inspired by biological ones. In other words, AI researchers are plagiarizing Mother Nature: they take inspiration from how neurons in a brain are connected. If you've ever heard the phrase "Neurons that fire together wire together", that slogan captures the basic intuition behind these algorithms: the strengths of the connections between artificial neurons are adjusted through experience rather than programmed by hand. This is why they are called artificial neural networks. Earlier neural networks typically had at most one hidden layer, but deep neural networks today have several hidden layers (see image). And the results are shocking...
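To make "adjusting connections through experience" concrete, here is a minimal Python sketch of Rosenblatt-style perceptron learning: a single artificial neuron that learns from labeled examples instead of following hand-written rules. The learning rate, the epoch limit, the zero starting weights, and the two toy truth tables are illustrative choices, not anything specified in the sources above. Strictly speaking, the perceptron rule is error-driven (it changes weights only after a wrong answer) rather than Hebb's "fire together, wire together" rule, but both adjust connection strengths from experience.

```python
Sample = tuple[tuple[int, int], int]

# Two tiny truth tables used as training data.
AND: list[Sample] = [((0, 0), 0), ((0, 1), 0), ((1, 0), 0), ((1, 1), 1)]
XOR: list[Sample] = [((0, 0), 0), ((0, 1), 1), ((1, 0), 1), ((1, 1), 0)]


def predict(weights: list[float], bias: float, x: tuple[int, int]) -> int:
    """The neuron 'fires' (outputs 1) if the weighted sum plus bias exceeds zero."""
    total = bias + sum(w * xi for w, xi in zip(weights, x))
    return 1 if total > 0 else 0


def train_perceptron(data: list[Sample], epochs: int = 20,
                     lr: float = 1.0) -> tuple[list[float], float]:
    """Perceptron learning rule: after each wrong answer, shift every weight
    by lr * error * input, strengthening connections that should have made
    the neuron fire and weakening those that made it fire wrongly."""
    weights = [0.0, 0.0]  # start with no connection strength at all
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in data:
            error = target - predict(weights, bias, x)  # -1, 0, or +1
            if error != 0:
                mistakes += 1
                weights = [w + lr * error * xi for w, xi in zip(weights, x)]
                bias += lr * error
        if mistakes == 0:  # a full pass with no errors: training has converged
            break
    return weights, bias


if __name__ == "__main__":
    for name, data in [("AND", AND), ("XOR", XOR)]:
        w, b = train_perceptron(data)
        correct = sum(predict(w, b, x) == t for x, t in data)
        print(f"{name}: {correct}/{len(data)} correct")
```

On AND, which a single straight line can separate, the rule settles on working weights within a few passes and gets 4/4. On XOR no setting of a single neuron's weights works, so it never gets all four right. That limitation of single-layer perceptrons is the one Minsky and Papert analyzed in their 1969 book Perceptrons; networks with hidden layers, like the deep networks described above, do not share it.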

Important Concepts

Decoding Connectionism


Defining AI (Take II)

The deep learning revolution is just beginning, and we'll have to wait and see just what will happen. However, given the dominance of deep learning, we must attempt to redefine what we mean by AI once more. For his part, Yudkowsky (2008: 311) argues that artificial intelligence refers to “a vastly greater space of possibilities than does the term Homo sapiens. When we talk about ‘AIs’ we are really talking about minds-in-general, or optimization processes in general” (italics in original, emphasis added). We'll be assuming this definition for the rest of the lesson: AI refers to optimization processes.

And so symbolic AI is no longer dominant. It is now referred to as Good Old-Fashioned AI (GOFAI). Deep learning is in. But the future is uncertain. And there is reason to long for the good ol' days...

Best case scenario...

Various forms of automation

Sidebar: Scientific Management

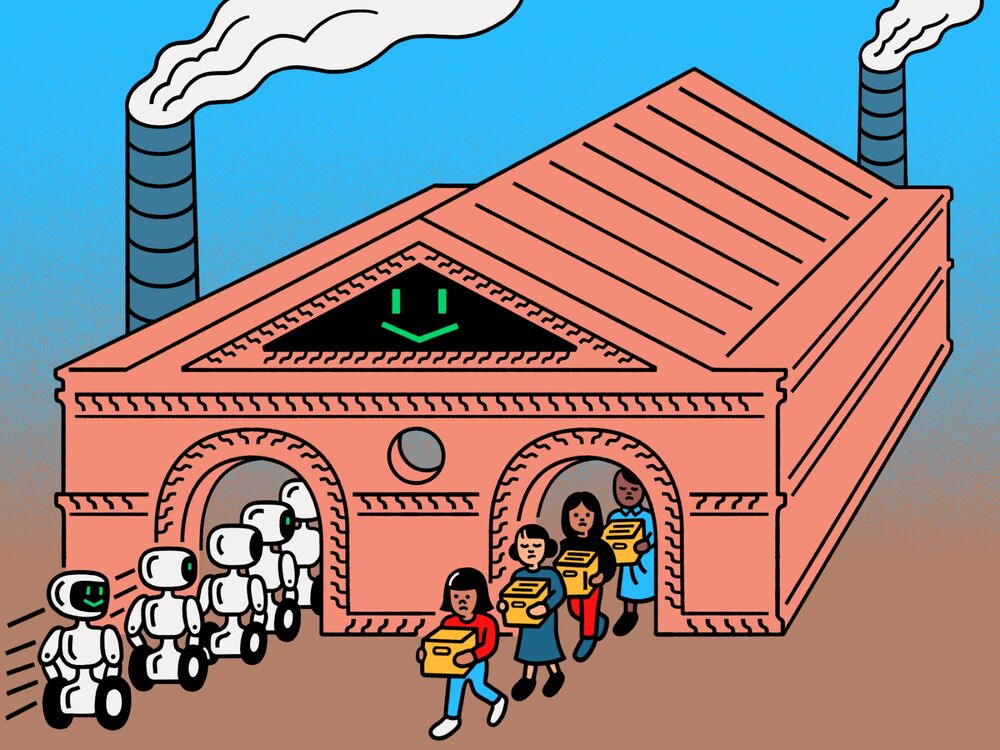

The threat of automation is sometimes downplayed by those who, correctly, remind us that machine learning is only successful within very narrow domains. In other words, ML-trained robots are essentially good at one thing, or perhaps a handful of things. This argument, however, misses a key development in 20th-century labor. Thanks to Taylorism and Fordism, many manufacturing and industrial jobs are themselves confined to very narrow domains. In the push to increase efficiency, scientific management made laborers dumber (as Adam Smith had warned the division of labor would) and also more like machines, in that they perform just a few skills repetitively, day after day. Of course, a machine can do that. Moreover, even partial automation should worry you... Efficiency algorithms are already being used in the workplace, not to replace workers but to replace managers. This approach is taking Taylorism to the next level. Here's the context...

The "evolution" of management...

Frederick Taylor, 1856-1915.

To understand this issue, we must first understand the history of management. Taylorism, also known as "scientific management", is a factory management system developed in the late 19th century by Frederick Taylor. His goal was to increase efficiency by evaluating every individual step in a manufacturing process and timing the fastest speed at which it could be performed satisfactorily. He then added up the times needed to perform a given worker's set of tasks and made that sum the worker's time goal. In other words, workers had to perform their assigned tasks as quickly as it was feasible to perform them, consistently throughout the day, with no account taken of naturally occurring human fatigue. Taylor, and later Ford, also pioneered the breaking down of the production process into specialized, repetitive tasks.
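The arithmetic behind a Taylor-style time goal is simple enough to sketch. In the Python below, the step names, the timings, and the eight-hour shift are all hypothetical; only the procedure follows the paragraph above: time each step at its fastest satisfactory speed and add the times to get a target for the whole task. The `daily_quota` helper is an illustrative extension, one way of reading what such a target implies over a full shift.

```python
# Hypothetical stopwatch results: fastest satisfactory time (seconds) for
# each step of one assembly task. Step names and numbers are invented.
fastest_step_times: dict[str, float] = {
    "pick up part": 4.0,
    "position part": 6.5,
    "fasten bolts": 12.0,
    "inspect and set aside": 5.5,
}


def time_goal(step_times: dict[str, float]) -> float:
    """Add up the fastest time for every step to get the target for one cycle."""
    return sum(step_times.values())


def daily_quota(step_times: dict[str, float], shift_hours: float) -> int:
    """Whole cycles per shift if every single cycle meets the time goal.

    Note what is missing: no allowance for fatigue, breaks, or slowing down
    as the day goes on.
    """
    shift_seconds = shift_hours * 3600
    return int(shift_seconds // time_goal(step_times))


if __name__ == "__main__":
    print("time goal per cycle:", time_goal(fastest_step_times), "s")
    print("implied 8-hour quota:", daily_quota(fastest_step_times, 8))
```

With these made-up numbers the cycle target is 28 seconds, which over an eight-hour shift implies 1,028 units. The calculation assumes every cycle is as fast as the fastest observed one, which is exactly the missing allowance for fatigue described above.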

Scientific management, however, may in fact have deeper and more sinister roots. In The Half Has Never Been Told, Edward Baptist devotes chapter 4 to various aspects of slave labor in the early 19th century. Most relevant here is that the push and quota system, in which slaves were whipped if they didn't reach their daily goal and the goal was progressively increased as time passed, was devised during this period. Apart from the whipping, this is not terribly dissimilar from factory management in the 20th and 21st centuries. Workers are pushed with the threat of having their hours cut if they don't perform at a level satisfactory to their managers, and quota systems are what led to scandals such as the Wells Fargo quota scandal.²

This is not just hyperbole. The Cold War, which had numerous effects on social life in the United States, unsurprisingly also had an effect on management. During the late 1960s there was widespread agitation and unrest among the working classes and disenfranchised racial groups in America. Their radical message, at least that of the working class, was reminiscent of the romantic early Karl Marx, emphasizing an engaged revolutionary stance that sought creative release rather than the technocracy of the Soviet Union. Their fundamental complaint was that the threat of hot war with the Soviet Union had ushered in a military-industrial welfare state, with an emphasis on continued high-pace industrialization under a strict managerial hierarchy, in which workers submitted to the scientific management philosophies of Taylorism and Fordism. The work was alienating, and although it might have been justifiable during an actual hot war with a major power, no such war had come. So, the activists fought for an end to this approach (see Priestland 2010, chapter 11).

Back to the 21st century...

How does partial automation make things worse for us today? Viewed in its historical context, scientific management is a way of maximally exploiting an employee's labor power. Emily Guendelsberger (2019) gives various examples of companies using efficiency algorithms for scheduling and micromanagement, with adverse effects on workers. Think about it. Your boss might be overbearing enough as it is. Now imagine that your boss were instead an automated system that could keep track of your daily tasks down to the microsecond. Such systems can drive people to the point of physical collapse. An interview with Guendelsberger is listed under Related Material below.

Food for thought...

Does this mean war?

Is it possible that a growing and disaffected lower class will revolt and attack the upper classes? It wouldn't be the first time this happened in history...

Perhaps something can be done, however, as a sort of "pressure release". Although some political candidates have recently advocated a universal basic income to address income inequality, Kai-Fu Lee (2018, chapter 9) lays out his vision of a social investment stipend. A universal basic income, Lee argues, will cover only bare minimum necessities and will do nothing to assuage the loss of meaning and social cohesion that will come with a jobless economy. This is where Lee's social investment stipend comes in. These stipends could be awarded to compassionate healthcare workers, teachers, artists, students who record oral histories from the elderly, service workers, botanists who explain indigenous flora and fauna to visitors, etc. By raising the social status of those who promote social cohesion and emphasize human empathy, we can build an empathy-based, post-capitalist economy.

Or else... What's the alternative?

To be continued...

FYI

Suggested Reading: John Searle, "Minds, Brains, and Programs"

TL;DR: 60-Second Adventures in Thought, The Chinese Room

Supplemental Material—

- Reading: Cameron Buckner and James Garson, Stanford Encyclopedia of Philosophy Entry on Connectionism

- Video: Hans Dooremalen, Connectionism

Related Material—

- Video: The London Real, David Graeber On Bullsh*t Jobs

- Podcast: The Intercept, OMNICIDAL TENDENCIES: THE NUCLEAR PRESIDENCY OF DONALD TRUMP, interview with Emily Guendelsberger.

- Note: The interview begins around 48:30. The interested student should also check out Guendelsberger's book, On the Clock: What Low-Wage Work Did to Me and How It Drives America Insane.

Advanced Material—

- Eliezer Yudkowsky, Artificial Intelligence as a Positive and Negative Factor in Global Risk

Footnotes

1. This is from one of Douglas Hofstadter's dialogues between Achilles and Mr. Tortoise, which can be found in his Gödel, Escher, Bach: an Eternal Golden Braid (see p. 328 of the 1999 edition for the quote).

2. The interested student can check out season 2, episode 2 of the Netflix series Dirty Money for more on the Wells Fargo scandal.